Before Anything Else: The Bare Minimum &%*$ You Need To Know

The EU AI Act creates the world’s first comprehensive AI regulatory framework, with penalties up to €35 million or 7% of global annual turnover. Here’s what you absolutely need to know:

1. Risk Classification is Everything

Your AI systems will fall into one of four categories that determine your obligations:

- Unacceptable Risk: Completely banned (social scoring, emotion recognition in workplaces, manipulative systems targeting vulnerabilities)

- High Risk: Stringent requirements (HR systems, credit scoring, educational assessment, critical infrastructure)

- Limited Risk: Transparency obligations (chatbots, deepfakes, GenAI like ChatGPT)

- Minimal Risk: No special requirements (spam filters, inventory management, standard recommendation systems)

2. Critical Timeline

- February 2025: Ban on prohibited systems takes effect

- August 2025: Foundation model (generative AI) requirements become enforceable

- August 2026: Core high-risk system compliance begins

- August 2027: Full compliance required

3. Practical Business Impact

If your AI systems are high-risk, you need documentation, risk management, human oversight, and registration in the EU database. For all other systems, focus on risk assessment now to determine where you stand.

Using AI systems (rather than developing them) has fewer requirements, but you’re still responsible for proper usage, oversight, and verification. Take advantage of regulatory sandboxes to test compliance in a safe environment before full implementation.

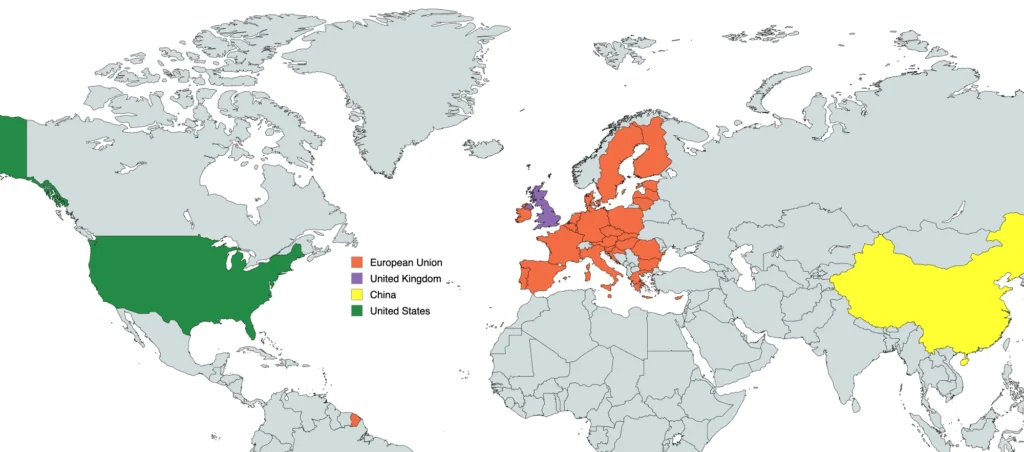

Remember: This isn’t just for EU businesses—any company using AI that affects EU citizens must comply, and most vendors will build their products to meet these standards globally.

Overview

The European Union did an excellent job in establishing the world’s first comprehensive legislative framework for Artificial Intelligence with the EU AI Act. This regulation adopts a risk-based approach, categorizing AI systems based on their potential harm and applying increasingly stringent requirements as risk levels rise. Unlike the sector-specific approaches found in the US or the context-based strategy of the UK, the EU provides a horizontal framework that applies across all industries and use cases.

In essence, they took the approach they had with privacy and GDPR and refit it for AI. Nice.

For medium-sized enterprises ($10M-$100M revenue), the EU AI Act creates clear compliance pathways but introduces significant documentation, testing, and governance requirements for high-risk applications. The framework balances innovation with fundamental rights protection, making risk classification and management central to European AI regulation.

But, but, but… Nobody is pointing at the EU as being a leader in AI *technology,* are they?

The EU has stumbled a bit off the starting line when it comes to AI – much of this act concerns incentives for EU companies, particularly around generative AI. This is great – I applaud that. However, what they got really right was the compliance side of it. They’re just good at this by now, after the slog through the desert that was the march to GDPR.

AI compliance, and with it the EU’s compliance work, touches everyone using AI tools in business, and the penalties aren’t insignificant: up to €35 million or 7% of global annual turnover, whichever is higher.

But wait…wait…hold up. I’m not doing anything that’s going to touch EU citizens or use their data. Ok, that’s fine. Game it out – do you think that the companies providing the product will build a special version just for the people outside of the EU? Nope. It’s going to be just like it is with the web – everybody gets the GDPR warnings, regardless of the fact that they’re sitting in Orlando, FL, looking up the best routes through Disney World. It matters not that this isn’t Euro Disney. You’re going to get the GDPR warnings. And thus, by their efforts, they have set a baseline for the entire world.

Clever.

Key Regulatory Components

Risk-Based Classification

The EU AI Act adopts a tiered, risk-based approach that categorizes AI systems into four levels of risk:

| Category | Description | Examples |
|---|---|---|
| Unacceptable Risk | Systems that pose a clear threat to safety, livelihoods, or fundamental rights are prohibited entirely. | Social scoring, emotion recognition in workplaces, manipulative systems targeting vulnerabilities |
| High Risk | Systems with significant potential to harm health, safety, fundamental rights, or the environment require stringent oversight. | HR systems, credit scoring, educational assessment, critical infrastructure |
| Limited Risk | Systems that pose specific transparency risks require disclosure obligations. | Chatbots, deepfakes, GenAI like ChatGPT |
| Minimal Risk | All other AI systems face minimal or no additional regulation beyond existing laws. Most business AI applications fall into this category. | Spam filters, inventory management, standard recommendation systems |
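
If you want a first-pass triage of your own AI inventory, the four tiers can be sketched as a simple lookup. This is a rough illustration, not a legal test: the `RiskTier` enum, the `EXAMPLE_TIERS` map, and the `triage` function are all names I made up, and the entries are just the example use cases from the summary at the top of this article. Anything not in the map deliberately comes back as "unknown" so that a human has to look at it.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "Unacceptable Risk"
    HIGH = "High Risk"
    LIMITED = "Limited Risk"
    MINIMAL = "Minimal Risk"


# Example use cases taken from this article's summary; illustrative only.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "workplace emotion recognition": RiskTier.UNACCEPTABLE,
    "hr screening": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "educational assessment": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake generation": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
    "inventory management": RiskTier.MINIMAL,
}

# One-line obligation summaries, paraphrased from the article.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "Prohibited: do not deploy",
    RiskTier.HIGH: "Stringent requirements: documentation, risk management, "
                   "human oversight, registration",
    RiskTier.LIMITED: "Transparency obligations: disclose AI use",
    RiskTier.MINIMAL: "No special requirements",
}


def triage(use_case: str) -> tuple[Optional[RiskTier], str]:
    """Return (tier, obligation summary); tier is None for unmapped use cases."""
    tier = EXAMPLE_TIERS.get(use_case.strip().lower())
    if tier is None:
        return None, "Unknown: needs a manual risk assessment"
    return tier, OBLIGATIONS[tier]


for case in ("Credit Scoring", "spam filter", "demand forecasting"):
    tier, summary = triage(case)
    print(case, "->", tier.value if tier else "unclassified", "|", summary)
```

The point of the "unknown" branch is that a lookup table can only confirm what you already classified; the real classification work still happens against the Act's criteria.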

Requirements for High-Risk AI

High-risk AI systems must meet stringent requirements, including:

- Risk management systems throughout the AI lifecycle

- Data governance and quality management

- Technical documentation and record-keeping

- Transparency and information provision to users

- Human oversight of system operations

- Robustness, accuracy, and cybersecurity

- Registration in the EU database before market placement

- Conformity assessment procedures

Governance and Enforcement

The EU AI Act establishes a multi-layered governance structure:

- European Artificial Intelligence Board: Coordinates implementation and develops guidance

- National Supervisory Authorities: Oversee compliance and enforcement at member state level

- Market Surveillance Authorities: Monitor AI systems on the market

- Notified Bodies: Conduct third-party conformity assessments for high-risk systems

Enforcement includes penalties of up to €35 million or 7% of global annual turnover (whichever is higher) for the most serious violations.
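
The "whichever is higher" rule is easy to misread, so here is the arithmetic as a tiny sketch. The function name `max_fine_eur` and its parameters are my own invention; the defaults are the €35 million and 7% figures quoted above. It assumes turnover is already expressed in euros and ignores currency conversion entirely.

```python
def max_fine_eur(global_turnover_eur: float,
                 fixed_cap_eur: float = 35_000_000.0,
                 turnover_share: float = 0.07) -> float:
    """Ceiling of the fine: the fixed amount or the turnover share, whichever is higher."""
    if global_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    return max(fixed_cap_eur, turnover_share * global_turnover_eur)


# At EUR 100M turnover, 7% is EUR 7M, so the EUR 35M figure is the ceiling.
mid_market = max_fine_eur(100_000_000)

# At EUR 1B turnover, 7% is EUR 70M, which overtakes the EUR 35M figure.
large_firm = max_fine_eur(1_000_000_000)

print(f"mid-market ceiling: EUR {mid_market:,.0f}")
print(f"large-firm ceiling: EUR {large_firm:,.0f}")
```

The crossover sits at €500 million in turnover (35M / 0.07). Below that, the fixed figure is the one that matters, which covers every company in the $10M-$100M band this article is written for.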

GPAI (Foundation Model) Provisions

The Act includes specific requirements for general-purpose AI models with systemic risk, including:

- Model documentation and evaluation

- Adversarial testing

- Systemic risk assessment and mitigation

- Cybersecurity measures

- Energy efficiency reporting

- Serious incident notification

Implementation Timeline

| Date | Event | Impact on Medium-Sized Enterprises |
|---|---|---|
| March 13, 2024 | EU AI Act formally adopted by European Parliament | Finalized legal text, enabling compliance planning |
| August 1, 2024 | EU AI Act entered into force | Official start of implementation timeline |
| February 2, 2025 | Prohibitions on unacceptable risk AI systems apply | Ban on prohibited practices takes effect |
| August 2, 2025 | General-purpose AI model obligations and penalties apply | Requirements for foundation models become enforceable |
| August 2, 2026 | First phase of high-risk AI system regulations apply | Core compliance requirements for high-risk systems begin |
| Q1-Q4 2026 | Expected publication of sectoral guidance | Clarification on implementation in specific domains |
| August 2, 2027 | Second phase of high-risk AI system regulations apply | Full compliance required for all aspects of the AI Act |
| 2026-2028 | First formal enforcement actions expected | Precedents will be set for implementation standards |
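
A quick way to keep the phases straight is to ask "what already applies on a given date?" The sketch below does that with the four application dates from the timeline table. `MILESTONES`, `in_force`, and `next_milestone` are hypothetical names of mine, and the labels are shortened from the table; treat it as a planning aid, not a statement of what the regulation requires on any particular day.

```python
from datetime import date

# Application dates copied from the timeline table (not the guidance or enforcement rows).
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk AI systems"),
    (date(2025, 8, 2), "General-purpose AI model obligations and penalties"),
    (date(2026, 8, 2), "First phase of high-risk AI system regulations"),
    (date(2027, 8, 2), "Second phase of high-risk AI system regulations"),
]


def in_force(on: date) -> list[str]:
    """Labels of every milestone whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date):
    """The earliest (date, label) pair strictly after `on`, or None if all have passed."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    return min(upcoming) if upcoming else None


check_date = date(2026, 1, 15)
print("Already applies:", in_force(check_date))
upcoming = next_milestone(check_date)
if upcoming:
    start, label = upcoming
    print(f"Next up: {label} on {start.isoformat()} "
          f"({(start - check_date).days} days away)")
```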

Mid-Market AI Regulatory Requirements and Deadlines

| Requirement | Description | Implementation Timeline | Penalties |
|---|---|---|---|
| Risk Assessment | Determine the risk category of your AI system and relevant obligations | Immediate planning needed; formal requirements apply from Feb/Aug 2025 | Varies by risk level |
| Prohibited Use Evaluation | Verify no AI uses fall under prohibited categories | February 2, 2025 | Up to €35M or 7% of global annual turnover |
| Data Governance | Implement data quality controls and appropriate datasets for training/validation | August 2, 2026 | Up to €15M or 3% of global annual turnover for high-risk systems |
| Technical Documentation | Create and maintain comprehensive AI system documentation | August 2, 2026 | Up to €15M or 3% of global annual turnover for high-risk systems |
| Transparency | Provide clear information to users about AI capabilities and limitations | August 2, 2026 | Up to €15M or 3% of global annual turnover |
| Human Oversight | Implement mechanisms for meaningful human supervision | August 2, 2026 | Up to €15M or 3% of global annual turnover for high-risk systems |
| Risk Management | Establish ongoing risk assessment and mitigation processes | August 2, 2026 | Up to €15M or 3% of global annual turnover for high-risk systems |
| Quality Management | Develop quality management systems for high-risk AI | August 2, 2027 | Up to €15M or 3% of global annual turnover |
| Accuracy & Robustness | Ensure appropriate levels of accuracy and resilience to errors/attacks | August 2, 2026 | Up to €15M or 3% of global annual turnover for high-risk systems |
| Registration | Register high-risk systems in the EU database | August 2, 2026 | Up to €15M or 3% of global annual turnover |
| Conformity Assessment | Complete self-assessment or third-party verification as required | August 2, 2026 | Up to €15M or 3% of global annual turnover |
| Post-Market Monitoring | Implement system for tracking and addressing issues after deployment | August 2, 2027 | Up to €15M or 3% of global annual turnover |
| GPAI Model Obligations | Meet specific requirements for large foundation models | August 2, 2025 | Up to €15M or 3% of global annual turnover |

Impact by Industry Vertical

| Vertical | Impact Level | Key Considerations |
|---|---|---|
| Healthcare | Very High | |
| Financial Services | High | |
| Human Resources | High | |
| Retail/E-commerce | Moderate | |
| Manufacturing | Moderate to High | |
| Education | High | |
| Transportation | High | |
| Legal Services | High | |
| Automotive | High | |

Comparison with Other Regulatory Frameworks

Comparison with US Approach

| Aspect | EU AI Act | US Approach |
|---|---|---|
| Regulatory Philosophy | Comprehensive horizontal framework with risk-based categorization | Sector-specific regulations with emphasis on industry self-regulation |
| Implementation | Centralized requirements with harmonized implementation | Distributed across agencies with varying enforcement priorities |
| Legal Authority | Dedicated comprehensive legislation with clear prohibitions | Existing laws applied to AI with limited new legislation |
| Risk Classification | Formal risk categories with specific obligations for each | Informal/agency-specific risk determinations |
| Penalties | Standardized penalties up to 7% of global turnover | Varied by sector and violation type |
| Innovation Balance | More prescriptive approach balanced with innovation support | Strong emphasis on innovation with targeted interventions |

Comparison with UK Approach

| Aspect | EU AI Act | UK Approach |
|---|---|---|
| Regulatory Philosophy | Horizontal framework with fixed risk categories | Context-based approach emphasizing existing regulators |
| Legal Foundation | Comprehensive single legislation | Principles applied through existing regulatory frameworks |
| Implementation | Centralized requirements coordinated through AI Board | Sector-specific implementation through existing regulators |
| Technical Specificity | Detailed requirements defined in legislation | Guidance and principles with sector-specific interpretation |
| Innovation Balance | Detailed compliance requirements with sandboxing provisions | Explicitly pro-innovation with cross-sector principles |
| Global Influence | Setting de facto global standards for AI compliance | Seeking differentiated approach focusing on practical application |

Comparison with China's Approach

| Aspect | EU AI Act | China's Approach |
|---|---|---|
| Regulatory Philosophy | Horizontal framework with risk-based categorization | Vertical, sector-specific regulations with strong government oversight |
| Content Control | Focus on transparency and avoiding harm | Strict prohibitions and content moderation requirements |
| Data Governance | Emphasis on data quality and privacy protection | Stringent data localization and security requirements |
| Enforcement | Distributed across national authorities | Centralized through the Cyberspace Administration of China (CAC) and other authorities |
| Innovation Balance | Attempts to balance innovation with protection of rights | National security and stability prioritized over unrestricted innovation |

Resources for Medium-Sized Enterprises

Special Considerations: Using AI vs. Developing AI

One key aspect of the EU AI Act is the distinction between companies that merely use AI systems (“deployers” in the Act’s terminology) and those that develop them (“providers”). This distinction affects compliance requirements significantly:

Key Differences:

- USERS primarily need to focus on proper usage, oversight, and verification

- DEVELOPERS need to meet more comprehensive requirements including documentation, conformity assessments, and technical standards

- DEVELOPERS have access to special support measures like:

  - Simplified technical documentation

  - Priority access to regulatory sandboxes

  - Reduced conformity assessment fees

  - Dedicated guidance channels

| Timeline | SMEs USING AI | SMEs DEVELOPING AI |
|---|---|---|
| Immediate Priorities (Q1-Q2 2025) | | |
| Medium-Term Actions (Q3 2025-Q3 2026) | | |
| Documentation Requirements | | |
| Training Requirements | | |
| Compliance Monitoring | | |

Regulatory Sandboxes

The EU AI Act introduces “AI Regulatory Sandboxes” – controlled, real-world testing environments where businesses (especially SMEs) can develop, train, and validate innovative AI systems before market deployment. This is an important concept, one that you’re going to see a lot, and one that you should *absolutely* make use of if it is applicable to you.

These sandboxes provide a safe space to test AI applications without facing full regulatory penalties, prioritize access for SMEs and startups, allow experimentation with innovative AI solutions, and provide direct interaction with regulators for guidance.

I mean, don’t take your cryptoscam system there. Well, on second thought, yes. Please do take it there. You want to make sure that you pass regulatory approval to reach the most, uh, “clients.” The regulators will be surprisingly helpful.

Surprise!

Random Table of Useful Information

Pretty much just what it says it is. Contacts and links and such to help you make it through this process.

| Category | Resource | Description | Access |
|---|---|---|---|
| Official Guidance and Support | European Commission AI Office | | Website: European Commission AI |
| Official Guidance and Support | AI Regulatory Sandboxes | | Contact through national regulatory authorities |
| Official Guidance and Support | AI Pact for SMEs | | Website: AI Pact |
| Technical Standards and Tools | CEN-CENELEC AI Standards | | Website: CEN-CENELEC AI |
| Technical Standards and Tools | EU AI Registry | | Expected launch: Early 2026 |
| Technical Standards and Tools | ENISA Cybersecurity Guidance for AI | | Website: ENISA AI Security |
| Industry Resources | European AI Alliance | | Website: European AI Alliance |
| Industry Resources | Industry Codes of Conduct | | Expected publication: 2025-2026 |
| Industry Resources | EU-US Trade and Technology Council Resources | | Website: EU-US TTC |

Implementation Strategy for Mid-Sized Enterprises

For medium-sized enterprises ($10M-$100M revenue) operating in or selling to the EU, an effective implementation strategy might include… (Ok, look. I know the following list is super-generic, but I’d have to know more about your specific company in order to make actual, hard recommendations.) Generic or not, you absolutely will need to tick off all of these boxes. Maybe you say, “Ok, we don’t really have any high-risk systems.” And I say – that’s awesome. Are you absolutely positive? Ok, great. You’re done with #1, and you can probably tick off #6 (nice).

1. Risk Classification: Determine which risk category your AI systems fall into using the EU AI Act criteria

2. Gap Analysis: Compare existing practices against requirements for your risk category

3. Implementation Roadmap: Create a phased plan aligned with the EU AI Act timeline

4. Documentation System: Establish processes for creating and maintaining technical documentation. Note how this isn’t last, as it always seems to be. “Documentation by code” is not cool.

5. Data Governance: Implement appropriate data quality and governance measures. (I don’t care. Yes, that’s the answer to “but we don’t have any sensitive data.” I don’t – you need policies for your data, full stop. These could be just a few lines exchanged in an email, they just need to be written down and agreed to.)

6. Human Oversight: Design and implement human oversight mechanisms for high-risk systems

7. Monitoring System: Create processes for post-market monitoring and incident reporting

8. Supplier Management: Ensure AI components from third parties meet applicable requirements

Medium-sized enterprises should prioritize compliance for high-risk systems first, while progressively implementing appropriate measures for lower-risk applications in line with the phased implementation timeline of the AI Act. Leveraging regulatory sandboxes, industry associations, and official guidance can help manage compliance costs while ensuring effective implementation.
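
If a spreadsheet feels like too much ceremony, the risk classification and gap analysis steps, plus the "high-risk first" ordering, fit in a few lines of Python. Everything here is a sketch under my own assumptions: the `AISystem` class, the `worklist` function, the tier strings, and the sample inventory are all made up for illustration, and the control names are shorthand for the high-risk requirements listed earlier in this article, not official terms.

```python
from dataclasses import dataclass, field

# Shorthand for the high-risk requirements listed earlier in this article.
HIGH_RISK_CONTROLS = {
    "risk management",
    "data governance",
    "technical documentation",
    "transparency",
    "human oversight",
    "accuracy and robustness",
    "registration",
    "conformity assessment",
}

# Assumed mapping of tier to required controls; adjust to your own assessment.
REQUIRED_BY_TIER = {
    "high": HIGH_RISK_CONTROLS,
    "limited": {"transparency"},
    "minimal": set(),
}

# Hypothetical sort order: lower number means handle first.
TIER_PRIORITY = {"unacceptable": 0, "high": 1, "limited": 2, "minimal": 3}


@dataclass
class AISystem:
    name: str
    tier: str
    implemented: set = field(default_factory=set)

    def gaps(self) -> set:
        """Controls still missing for this system's tier."""
        if self.tier == "unacceptable":
            return {"discontinue prohibited use"}
        return REQUIRED_BY_TIER[self.tier] - self.implemented


def worklist(systems):
    """Systems with open gaps, highest-risk tier first, then by name."""
    open_items = [s for s in systems if s.gaps()]
    return sorted(open_items, key=lambda s: (TIER_PRIORITY[s.tier], s.name))


inventory = [
    AISystem("support chatbot", "limited"),
    AISystem("resume screener", "high", {"technical documentation", "human oversight"}),
    AISystem("spam filter", "minimal"),
]

for system in worklist(inventory):
    print(f"{system.name} ({system.tier}): {sorted(system.gaps())}")
```

Systems with nothing outstanding drop off the list, so an empty worklist is your "done for now" signal. It says nothing about whether the tier you assigned was right in the first place, which is still the hard part.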