Overview

The United States takes a sector-specific approach to AI regulation, balancing innovation with safety through a combination of federal guidance, agency enforcement, and emerging state-level frameworks.

In case you haven’t been watching the spectacle that is US politics, we’ve recently gotten a new president and a very anti-regulatory executive branch. What this means, from a practical perspective, is that there are a few regulatory bodies with specific authority over AI in their realms (FTC for consumer protection, FDA for medical devices).

We do expect a new executive order early this summer. Anyone reasonable is anticipating something that will be aggressively pro-US-business, with serious concerns around foreign competition, both between governments and between businesses.

Unlike the EU’s comprehensive AI Act, the US regulatory landscape is characterized by a patchwork of existing laws applied to AI use cases, voluntary guidelines, and targeted interventions in high-risk domains. For mid-sized enterprises ($10M-$100M revenue), this creates both flexibility and complexity, particularly when operating across multiple states.

The US approach emphasizes industry self-regulation while maintaining oversight through agencies like the Federal Trade Commission (FTC), which has active enforcement authority over deceptive or unfair AI practices.

The real action in the United States is right there in the name. States. And that’s why I have a whole section just on the States part of United States.

But, until the inevitable controversy** that will be the AI policy of this administration, here’s an overview of where things are at.

**Look, I’m not taking a particular political stance here – there is just no possible way that this will end up being non-controversial. It really doesn’t matter who is president, this was always going to be controversial; AI is that kind of topic. However, I expect this to be very, very controversial. I would anticipate aggressive limitations on commerce between ourselves and, say, just to pick a random country – China. GPU sales limitations, limits on use of foreign AI services, limits on what we provide to those countries with whom we are locked in a serious AI competition. I would also expect it to benefit Grok (built by xAI) in some way, given that Tesla, another Elon Musk company, has already had visibility, marketing, and Presidential support.

You would do well to look and see where they’re trying to go with Grok and see how that affects you. I don’t have a solid analysis yet, and it might not be worth doing given that it’s only 60 days until it is all announced. I’m not trying to help make stock market bets, so, we’ll see.

Timeline

| Date | Event | Description |
|---|---|---|
| August 2019 | NIST Report | U.S. Leadership in AI: A Plan for Federal Engagement in Developing Technical Standards and Related Tools published, outlining areas for AI standards development. Source |
| April 2020 | FTC Guidance | FTC publishes business guidance on using AI and algorithms, emphasizing transparency, fairness, and accountability. Source |
| October 2022 | White House Blueprint | Blueprint for an AI Bill of Rights released, emphasizing ethical AI use principles. Source |
| January 2023 | NIST AI RMF | NIST AI Risk Management Framework (AI RMF 1.0) released, providing voluntary guidance for managing AI risks. Source |
| October 2023 | Executive Order 14110 | White House issues Executive Order on Safe, Secure, and Trustworthy Development and Use of AI, emphasizing responsible AI practices. Source Additional Source |
| December 2023 | FTC Enforcement | FTC settles action against Rite Aid over facial recognition technology that the agency said falsely flagged customers, disproportionately women and people of color. Source |
| July 2024 | NIST Generative AI Profile | NIST releases the Generative AI Profile (NIST AI 600-1), a companion to the AI RMF covering risks specific to generative AI. Source |
| January 2025 | Executive Order 14179 | President Trump issues Executive Order "Removing Barriers to American Leadership in Artificial Intelligence," focusing on deregulation and promoting AI development. Source Additional Source |
| July 2025 (Planned) | AI Policy Release | Trump Administration plans to release a new AI policy to enhance national AI development and security. Source |

US Federal Level AI Regulatory Bodies

FTC Enforcement Authority

The FTC serves as the primary federal AI enforcement agency, applying Section 5 of the FTC Act to address unfair or deceptive AI practices. The agency has been particularly active in addressing:

- False or misleading claims about AI capabilities

- AI systems that produce discriminatory outcomes

- Deceptive data collection practices for AI training

- Inadequate disclosure of AI use in consumer interactions

Through Operation AI Comply (launched September 2024), the FTC has brought enforcement actions against deceptive AI claims and schemes, showing that existing consumer protection laws apply to AI without new legislation. Source

NIST Risk Management Framework

The National Institute of Standards and Technology (NIST) provides voluntary but influential guidance through its AI Risk Management Framework (RMF). This framework offers:

- A structured process for identifying, assessing, and managing AI risks

- Governance best practices for responsible AI deployment

- Technical guidelines for testing AI systems

- Documentation standards for demonstrating compliance

While not legally binding, NIST standards are often referenced by regulators and courts when evaluating the reasonableness of AI risk management practices. Source

Commerce Department AI Safety Institute

Established in November 2023, the AI Safety Institute develops technical guidance for AI safety and security, focusing on:

- Evaluation frameworks for large language models

- Testing protocols for generative AI capabilities

- Guidelines for managing risks from frontier AI systems

- Best practices for AI risk documentation and disclosure

Source

Sectoral Federal Regulations

Various federal agencies apply existing regulatory frameworks to AI systems in their domains:

- FDA: Regulates AI medical devices through its Software as a Medical Device (SaMD) framework Source

- HUD/CFPB: Address AI use in housing and lending decisions through fair lending and housing laws Source

- DOT: Developing guidelines for autonomous vehicles and AI in transportation Source

- EEOC: Enforces laws against AI-enabled discrimination in employment Source

USA AI Federal Regulatory Compliance Summary Table

| Requirement | Description | Implementation Timeline |
|---|---|---|
| Consumer Disclosure | Clearly disclose when AI is being used in consumer-facing applications | Immediate, enforceable under FTC deception authority |
| Algorithmic Impact Assessment | Evaluate high-risk AI systems for potential discriminatory impacts | Required for federal contractors; best practice for private sector |
| AI Documentation | Maintain records of training data sources, testing methodologies, and known limitations | Best practice now; likely to become mandated for high-risk systems by 2026 |
| Truth in AI Marketing | Ensure marketing claims about AI capabilities are substantiated and not misleading | Immediate, enforced through FTC Operation AI Comply |
| State-Specific Disclosures | Implement required notices and consent mechanisms for specific states | Varies by state (CA: Jan 2026; CO: Feb 2026; IL: Already effective) |
| Content Authentication | For user-facing generative AI, implement content provenance and disclosure mechanisms | Required in California by Jan 2026; best practice elsewhere |
| Security Safeguards | Implement protection against adversarial attacks, prompt injection, and data leakage | Best practice now; formal guidelines expected by end of 2025 |
| Human Oversight | Maintain meaningful human supervision for critical AI decision systems | Required in regulated sectors (finance, healthcare, employment) |
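As a rough illustration of how the State-Specific Disclosures row might feed an internal compliance check, here is a minimal Python sketch. The dates come from the table above; because the table gives only month and year, using the first day of each month is an assumption, and the function name `states_in_force` is hypothetical.

```python
from datetime import date

# Effective dates copied from the "State-Specific Disclosures" row.
# ASSUMPTION: the table lists only month and year, so day 1 is a placeholder.
STATE_EFFECTIVE = {
    "CA": date(2026, 1, 1),
    "CO": date(2026, 2, 1),
    "IL": None,  # listed as "Already effective"
}


def states_in_force(footprint, as_of):
    """Return the states in `footprint` whose requirements apply on `as_of`."""
    in_force = []
    for state in sorted(footprint):
        if state not in STATE_EFFECTIVE:
            continue  # not covered by the table; needs separate research
        effective = STATE_EFFECTIVE[state]
        if effective is None or effective <= as_of:
            in_force.append(state)
    return in_force


# Example: a company with customers in four states, checked mid-January 2026.
print(states_in_force({"CA", "CO", "IL", "TX"}, date(2026, 1, 15)))
```

A lookup like this is only as good as the dates behind it, so it would need to be refreshed from a state law tracker rather than hard-coded for long.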

Impact by Industry Vertical

| Vertical | Impact Level | Key Considerations |
|---|---|---|
| Healthcare | High | |
| Financial Services | High | |
| Human Resources | High | |
| Marketing/Media | Moderate to High | |
| Retail/E-commerce | Moderate | |
| Manufacturing | Low to Moderate | |
| Education | Moderate | |
| Transportation | Moderate to High | |

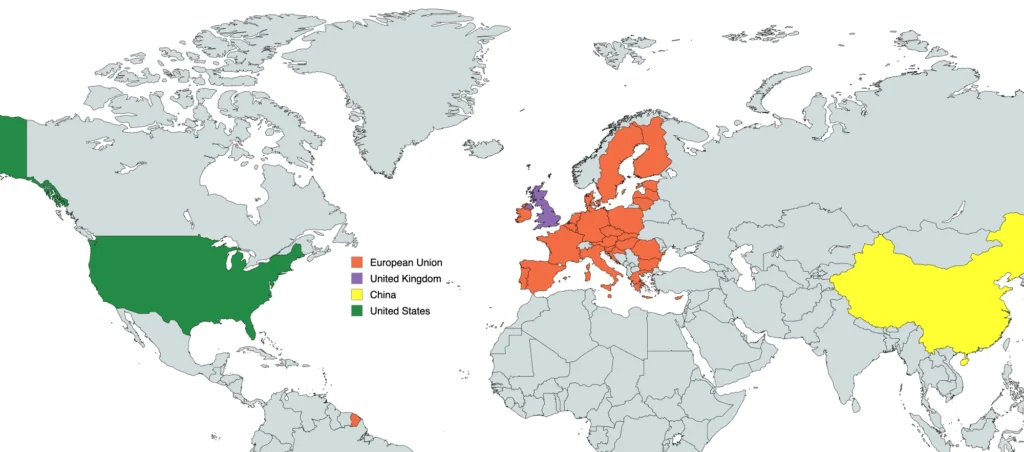

Comparison with Other AI Frameworks

United States vs. European Union (US vs. EU)

| Aspect | US Approach | EU AI Act |
|---|---|---|
| Regulatory Philosophy | Sector-specific regulations with emphasis on industry self-regulation | Comprehensive horizontal framework with risk-based categorization |
| Implementation | Distributed across agencies with varying enforcement priorities | Centralized requirements with harmonized implementation |
| Legal Authority | Existing laws applied to AI with limited new legislation | Dedicated comprehensive legislation with clear prohibitions |
| Risk Classification | Informal/agency-specific risk determinations | Formal risk categories with specific obligations for each |
| Penalties | Varied by sector and violation type | Standardized penalties up to 7% of global turnover |
| Innovation Balance | Strong emphasis on innovation with targeted interventions | More prescriptive approach balanced with innovation support |

United States vs. United Kingdom (US vs. UK)

| Aspect | US Approach | UK Approach |
|---|---|---|
| Regulatory Philosophy | Sector-specific approach with limited new legislation | Pro-innovation context-based approach |
| Implementation | Agency-driven enforcement of existing laws | Existing regulators applying AI principles to their domains |
| Technical Standards | NIST guidelines as voluntary frameworks | UK AI Safety Institute research with practical guidance |
| Enforcement | FTC and sectoral regulators acting independently | Coordinated through Digital Regulation Cooperation Forum |
| Data Protection | Sector-specific privacy laws applied to AI | Comprehensive data protection framework (UK GDPR) |
| Innovation Support | Research funding with limited regulatory coordination | Integrated innovation and regulatory approach |

United States vs. China

| Aspect | US Approach | China's Approach |
|---|---|---|
| Regulatory Model | Principle-based guidance with sector-specific rules | Comprehensive, prescriptive regulations |
| Government Role | Limited federal regulation with emphasis on industry self-regulation | Strong central oversight and intervention |
| Content Regulation | First Amendment protection for most content | Explicit restrictions on certain content |
| Data Controls | Limited data localization requirements | Mandatory data localization |
| Development Focus | Market-driven approach with government support for research | Strategic advancement of AI capabilities in priority sectors |

Resources for Medium-Sized Enterprises

Compliance Tools and Frameworks

The US ecosystem offers several resources to help medium-sized enterprises navigate the AI regulatory landscape:

NIST AI Risk Management Framework

- Provides a voluntary but comprehensive approach to AI governance

- Includes assessment tools and documentation templates

- Helps demonstrate due diligence in risk management

- Website: NIST AI RMF

FTC Business Guidance on AI

- Offers clear guidelines on avoiding unfair or deceptive AI practices

- Includes case studies from enforcement actions

- Regularly updated with new guidance

- Website: FTC Business Blog on AI

State AI Law Trackers

- Resources that monitor and summarize state-level AI regulations

- Help businesses identify applicable state requirements

- Examples include:

  - IAPP US State AI Governance Legislation Tracker

  - Husch Blackwell AI State Law Tracker

Government Resources

AI.gov Portal

- Central hub for federal AI initiatives

- Access to cross-agency resources and guidance

- Links to agency-specific AI frameworks

- Website: AI.gov

US AI Safety Institute

- Research and guidelines on frontier AI systems

- Technical papers on AI evaluation and testing

- Resources for responsible AI deployment

- Website: AI Safety Institute

FDA AI/ML Medical Device Resources

- Guidance for AI in healthcare applications

- Regulatory frameworks for Software as a Medical Device

- Website: FDA AI/ML Resources

Industry Alliances and Public-Private Partnerships

Partnership on AI

- Multi-stakeholder organization developing best practices

- Working groups on key AI governance challenges

- Resources for responsible AI implementation

- Website: Partnership on AI

AI Alliance

- Open community of organizations advancing open and trustworthy AI

- Resources for responsible AI development

- Collaboration on technical standards and best practices

- Website: AI Alliance

Industry-Specific Consortia

- Vertical-specific guidance and resource sharing:

  - Financial Data Exchange (financial services)

  - Digital Medicine Society (healthcare)

  - Consumer Technology Association (consumer products)

Implementation Strategy for Mid-Sized Enterprises

For medium-sized enterprises ($10M-$100M revenue) operating in the US, an effective compliance strategy might include:

- Baseline Assessment: Evaluate current AI applications against NIST AI RMF standards

- State Mapping: Identify applicable state regulations based on customer and operational footprint

- Vertical-Specific Compliance: Prioritize compliance with regulations specific to your industry

- Documentation System: Establish consistent documentation of AI development, testing, and deployment

- Monitoring Program: Create a process to track evolving federal guidance and state legislation

- Risk-Based Priorities: Focus resources on high-risk AI applications with greatest potential for harm

- Vendor Management: Ensure AI technology vendors can support compliance requirements

- Regular Training: Maintain staff awareness of AI ethics and compliance expectations

This approach enables medium-sized enterprises to navigate the complex US regulatory landscape while maintaining the flexibility to innovate that the US system generally allows.
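The documentation and risk-based prioritization steps in the strategy above could be sketched as a small AI system inventory. This is an illustration only: the impact levels are copied from the Impact by Industry Vertical table, while the numeric ranks, the `AISystemRecord` class, and the `prioritize` helper are hypothetical names and assumptions, not part of any regulatory framework.

```python
from dataclasses import dataclass, field

# Impact levels copied from the "Impact by Industry Vertical" table.
VERTICAL_IMPACT = {
    "Healthcare": "High",
    "Financial Services": "High",
    "Human Resources": "High",
    "Marketing/Media": "Moderate to High",
    "Retail/E-commerce": "Moderate",
    "Manufacturing": "Low to Moderate",
    "Education": "Moderate",
    "Transportation": "Moderate to High",
}

# ASSUMPTION: numeric ranks are illustrative, not a regulatory scale.
IMPACT_RANK = {"Low to Moderate": 1, "Moderate": 2, "Moderate to High": 3, "High": 4}


@dataclass
class AISystemRecord:
    """Hypothetical inventory entry for one AI application."""
    name: str
    vertical: str
    training_data_sources: list = field(default_factory=list)
    testing_methods: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)

    def missing_documentation(self):
        """List the documentation fields that are still empty."""
        to_check = ("training_data_sources", "testing_methods", "known_limitations")
        return [name for name in to_check if not getattr(self, name)]


def prioritize(records):
    """Order records so the highest-impact verticals are reviewed first."""
    return sorted(
        records,
        key=lambda r: IMPACT_RANK[VERTICAL_IMPACT[r.vertical]],
        reverse=True,
    )


inventory = [
    AISystemRecord("support-chatbot", "Retail/E-commerce",
                   training_data_sources=["vendor model"]),
    AISystemRecord("resume-screener", "Human Resources"),
]
for record in prioritize(inventory):
    print(record.name, record.missing_documentation())
```

The three documentation fields mirror the AI Documentation row of the compliance summary table (training data sources, testing methodologies, known limitations), so gaps flagged here map directly onto that requirement.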