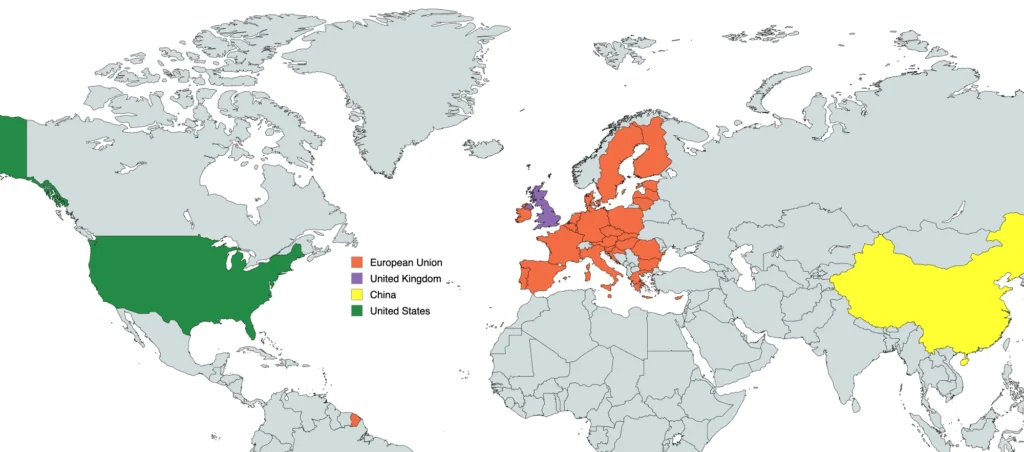

Overview

The United Kingdom has adopted a distinctive “pro-innovation” approach to AI regulation that contrasts with both the comprehensive legislation of the EU and the sector-specific approach of the US. The UK framework is characterized by its context-based methodology, which relies on existing regulators applying cross-cutting principles to their respective sectors rather than creating a new overarching law. This approach aims to maintain regulatory agility while addressing AI risks through established regulatory frameworks.

For medium-sized enterprises ($10M-$100M revenue), the UK approach offers more flexibility and a lighter compliance burden than the EU AI Act while still maintaining meaningful oversight. The framework emphasizes proportionality, scaling regulatory intervention to the specific context and risk profile of each AI application. The result is a business environment that encourages innovation while requiring safeguards tailored to each sector's risks and requirements.

UK Regulatory Timeline

| Date | Event | Impact on Medium-Sized Enterprises |
|---|---|---|
| March 2023 | UK AI Regulation White Paper published | Established pro-innovation, context-based regulatory approach |
| November 2023 | UK AI Safety Summit held at Bletchley Park | Focused international attention on frontier AI safety |
| November 2023 | AI Safety Institute established | Created resource for evaluating advanced AI systems |
| January 2025 | UK AI Opportunities Action Plan published | Reinforced pro-innovation approach with practical support measures |
| April 2025 | Regulators' AI coordination framework published | Provided clarity on sectoral regulatory approaches |
| September 2025 | Online Safety Act AI provisions fully effective | Set requirements for AI content generation and identification |
| October 2025 | Financial Conduct Authority AI guidance finalized | Established expectations for AI use in financial services |
| 2025-2026 | Additional sectoral regulators' guidance expected | Will provide domain-specific implementation requirements |

Key Regulatory Components

Context-Based Regulatory Approach

The UK’s framework relies on existing regulators to adapt and apply AI principles within their sectors, creating a flexible approach that can evolve with technology. This includes:

- Sector-specific guidance from established regulatory bodies

- Adaptation of existing laws and regulations to AI applications

- Emphasis on outcomes rather than prescriptive technical requirements

- Regular coordination between regulators to ensure consistency

Cross-Cutting AI Principles

While implementation varies by sector, the UK has established five core principles that all regulators apply to AI governance:

- Safety, Security and Robustness: AI should function in a secure and reliable way with risks identified and mitigated

- Appropriate Transparency and Explainability: Organizations should provide appropriate information about how AI systems work and the processes surrounding them

- Fairness: AI should not discriminate unfairly against protected groups or create unjustified disparate outcomes

- Accountability and Governance: Organizations should ensure appropriate accountability for outcomes produced by AI systems

- Contestability and Redress: Mechanisms should be in place for users to challenge harmful outcomes from AI systems

AI Safety Institute

The UK has established the AI Safety Institute to research and evaluate risks from advanced AI systems, particularly foundation models. The institute:

- Conducts technical evaluations of frontier AI systems

- Develops safety testing methodologies

- Publishes research on AI risks and mitigations

- Collaborates internationally with similar institutions

- Provides voluntary evaluation frameworks for advanced AI

Online Safety Act’s AI Provisions

The Online Safety Act, fully implemented in 2025, sets requirements for AI systems that generate content, including:

- Identification of AI-generated content

- Risk assessment for generative AI tools

- Protections against harmful AI-generated content

- Age-appropriate design for AI systems accessible to children

Data Protection and AI

The UK’s data protection framework (UK GDPR and Data Protection Act 2018) applies to AI systems that process personal data, requiring:

- Transparency about automated decision-making

- Data minimization and purpose limitation

- Fairness in processing and outcomes

- Impact assessments for high-risk processing

- Appropriate technical and organizational measures

Mid-Market AI Regulatory Requirements and Deadlines

The UK leaves organizations considerable latitude to define their own approach. Outside high-risk sectors, the requirements are relatively light.

| Requirement | Description | Implementation Timeline |
|---|---|---|
| Sectoral Regulator Compliance | Adhere to AI guidance from relevant sector regulators (FCA, CMA, ICO, etc.) | Varies by sector; major guidance published 2025-2026 |
| Risk Assessment | Conduct proportionate risk assessments for AI systems | Best practice now; formal expectations in some sectors by late 2025 |
| Appropriate Transparency | Provide suitable information about how AI systems work | Required under data protection law; additional sectoral guidance through 2025-2026 |
| Documentation | Maintain records of AI development and deployment appropriate to risk level | Varies by sector; higher expectations for regulated industries |
| AI-Generated Content Labeling | Identify content created by AI systems | Required for user-facing content under Online Safety Act by Sep 2025 |
| Data Protection Impact Assessment | Conduct DPIAs for high-risk AI processing personal data | Already required under UK GDPR |
| Fairness Testing | Test AI systems for bias and discrimination | Best practice now; increasing regulatory expectations through 2025-2026 |
| Human Oversight | Maintain appropriate human supervision based on context and risk | Required in high-risk contexts (financial services, healthcare, etc.) |
| Safety Testing | Ensure robust testing of AI systems proportionate to risk | Increasing requirements through 2025-2026, particularly for safety-critical applications |
| Redress Mechanisms | Provide ways for individuals to challenge harmful AI outcomes | Required in regulated sectors; best practice elsewhere |

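
One way to put the requirements table to work is to encode each row together with the condition its Implementation Timeline column attaches to it, then filter by a company's profile. The sketch below is a minimal Python illustration under stated assumptions: the flag names and the `Requirement` and `triage` helpers are hypothetical, and the decision about which rows remain best practice when no trigger applies is our reading of the table, not regulatory text.

```python
from dataclasses import dataclass

# Illustrative encoding of the requirements table. The trigger flags
# ("regulated_sector", "processes_personal_data", ...) are hypothetical labels
# chosen for this sketch; they are not legal categories defined by UK law.


@dataclass(frozen=True)
class Requirement:
    name: str
    triggers: frozenset[str]  # any one of these flags makes the row a firm requirement
    best_practice: bool       # our reading: still recommended when no trigger applies


REQUIREMENTS = [
    Requirement("Sectoral Regulator Compliance", frozenset({"regulated_sector"}), False),
    Requirement("Risk Assessment", frozenset({"regulated_sector"}), True),
    Requirement("Appropriate Transparency", frozenset({"processes_personal_data"}), True),
    Requirement("Documentation", frozenset({"regulated_sector"}), True),
    Requirement("AI-Generated Content Labeling", frozenset({"user_facing_genai"}), False),
    Requirement("Data Protection Impact Assessment", frozenset({"high_risk_personal_data"}), False),
    Requirement("Fairness Testing", frozenset(), True),
    Requirement("Human Oversight", frozenset({"high_risk_context"}), True),
    Requirement("Safety Testing", frozenset({"safety_critical"}), True),
    Requirement("Redress Mechanisms", frozenset({"regulated_sector"}), True),
]


def triage(flags: set[str]) -> dict[str, list[str]]:
    """Split the table's rows into firm requirements and best practice for one profile."""
    required: list[str] = []
    recommended: list[str] = []
    for req in REQUIREMENTS:
        if req.triggers & flags:  # non-empty intersection: at least one trigger applies
            required.append(req.name)
        elif req.best_practice:
            recommended.append(req.name)
    return {"required": required, "best_practice": recommended}


# Example: a retailer (not in a regulated sector) running a customer-facing
# generative AI assistant that processes personal data.
result = triage({"processes_personal_data", "user_facing_genai"})
print(result["required"])  # ['Appropriate Transparency', 'AI-Generated Content Labeling']
print(result["best_practice"])
```

Keeping the triggers as sets lets a single row respond to several conditions (for instance, adding a healthcare flag to Human Oversight) without changing the filtering logic.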

UK AI Regulatory Impact by Vertical

| Vertical | Impact Level | Key Considerations |
|---|---|---|
| Financial Services | High | |
| Healthcare | High | |
| Media & Entertainment | Moderate to High | |
| Retail & E-commerce | Moderate | |
| Manufacturing | Low to Moderate | |
| Education | Moderate | |
| Transportation | Moderate to High | |
| Professional Services | Moderate | |

Comparison with Other Regulatory Frameworks

Comparison with US Approach

| Aspect | UK Approach | US Approach |
|---|---|---|
| Regulatory Philosophy | Pro-innovation, context-based approach | Sector-specific approach with limited new legislation |
| Implementation | Existing regulators applying AI principles to their domains | Agency-driven enforcement of existing laws |
| Technical Standards | UK AI Safety Institute research with practical guidance | NIST guidelines as voluntary frameworks |
| Enforcement | Coordinated through Digital Regulation Cooperation Forum | FTC and sectoral regulators acting independently |
| Data Protection | Comprehensive data protection framework (UK GDPR) | Sector-specific privacy laws applied to AI |
| Innovation Support | Integrated innovation and regulatory approach | Research funding with limited regulatory coordination |

Comparison with EU Approach

| Aspect | UK Approach | EU AI Act |
|---|---|---|
| Regulatory Philosophy | Context-based approach emphasizing existing regulators | Horizontal framework with fixed risk categories |
| Legal Foundation | Principles applied through existing regulatory frameworks | Comprehensive single legislation |
| Implementation | Sector-specific implementation through existing regulators | Centralized requirements coordinated through AI Board |
| Technical Specificity | Guidance and principles with sector-specific interpretation | Detailed requirements defined in legislation |
| Innovation Balance | Explicitly pro-innovation with cross-sector principles | Detailed compliance requirements with sandboxing provisions |
| Global Influence | Seeking a differentiated approach focused on practical application | Setting de facto global standards for AI compliance |

Comparison with China's Approach

| Aspect | UK Approach | China's Approach |
|---|---|---|
| Regulatory Philosophy | Pro-innovation, principles-based approach | Prescriptive rules with strict enforcement |
| Implementation | Sector-specific regulation through existing authorities | Comprehensive regulations across multiple domains |
| Content Control | Focus on harmful content with lighter-touch regulation | Strict content monitoring and filtering |
| Data Governance | Risk-based approach to data protection | Stringent localization and security requirements |
| Innovation Balance | Emphasis on fostering innovation with appropriate safeguards | Controlled innovation within strategic priorities |

Resources for Medium-Sized Enterprises

Government Resources and Support

AI Safety Institute

- Technical research on advanced AI systems

- Evaluation frameworks for foundation models

- Guidance on responsible AI development

- Website: AI Safety Institute

Office for Artificial Intelligence

- Central government hub for AI policy

- Resources on UK’s approach to AI regulation

- Links to sectoral regulators’ guidance

- Website: Office for AI

Digital Regulation Cooperation Forum (DRCF)

- Coordinated regulatory approach across digital and AI issues

- Joint guidance from ICO, CMA, Ofcom, and FCA

- Resources on algorithmic processing and auditing

- Website: DRCF

Sectoral Guidance

Financial Conduct Authority (FCA) AI Resources

- Guidance on AI use in financial services

- Expectations for model governance and explainability

- Requirements for algorithmic trading systems

- Website: FCA Innovation

Information Commissioner’s Office (ICO) AI Guidance

- Guidance on data protection aspects of AI

- Explainability requirements for automated decisions

- AI auditing framework

- Website: ICO AI Guidance

Medicines and Healthcare products Regulatory Agency (MHRA)

- Software as a Medical Device (SaMD) framework

- Guidance on AI in medical devices

- Regulatory pathways for innovative technologies

- Website: MHRA AI Guidance

Standards and Industry Resources

British Standards Institution (BSI) AI Standards

- Technical standards for AI development and deployment

- Guidance on AI ethics and governance

- Frameworks for risk assessment and mitigation

- Website: BSI AI Standards

Alan Turing Institute

- Research and guidance on AI ethics and safety

- Practical implementation of responsible AI

- Sector-specific AI applications research

- Website: Alan Turing Institute

TechUK AI Working Groups

- Industry collaboration on AI implementation

- Best practices for specific sectors

- Engagement with regulatory developments

- Website: TechUK AI

Implementation Strategy for Mid-Sized Enterprises

For medium-sized enterprises ($10M-$100M revenue) operating in the UK, an effective compliance strategy might include:

- Regulatory Mapping: Identify which sectoral regulators oversee your AI applications

- Principles Application: Apply the five cross-cutting principles to your specific context

- Risk Assessment: Conduct proportionate risk assessment based on application context

- Documentation Framework: Implement documentation appropriate to your risk level

- Stakeholder Engagement: Engage with relevant regulators early for innovative applications

- Cross-Border Strategy: Develop approach for managing UK requirements alongside EU/US obligations

- Monitoring System: Track evolving guidance from relevant sectoral regulators

- Industry Collaboration: Participate in industry groups to share implementation best practices
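
The first two steps, regulatory mapping and principles application, can be sketched as a small worksheet generator. This is a minimal Python sketch under assumptions: the attribute names and the `map_regulators` and `principles_worksheet` helpers are hypothetical, and the attribute-to-regulator pairs are a simplification of the sectoral remits described earlier (pairing online content with Ofcom, for example, reflects Ofcom's role under the Online Safety Act).

```python
# Hypothetical attribute -> regulator pairs, simplified from the sectoral
# remits discussed in this guide; real remits overlap and are broader.
REGULATOR_TRIGGERS = {
    "financial_services": "FCA",
    "medical_device": "MHRA",
    "personal_data": "ICO",
    "online_content": "Ofcom",
}

# The five cross-cutting principles of the UK framework.
PRINCIPLES = (
    "Safety, Security and Robustness",
    "Appropriate Transparency and Explainability",
    "Fairness",
    "Accountability and Governance",
    "Contestability and Redress",
)


def map_regulators(attributes: set[str]) -> list[str]:
    """Return the regulators whose remit a use case touches, de-duplicated and sorted."""
    return sorted({REGULATOR_TRIGGERS[a] for a in attributes if a in REGULATOR_TRIGGERS})


def principles_worksheet(use_case: str, attributes: set[str]) -> list[tuple[str, str, str]]:
    """Emit one (use case, regulator, principle) row per pair, to be filled in with evidence."""
    return [
        (use_case, regulator, principle)
        for regulator in map_regulators(attributes)
        for principle in PRINCIPLES
    ]


# Example: a credit-scoring model that uses customer data.
rows = principles_worksheet("credit scoring", {"financial_services", "personal_data"})
for row in rows[:2]:
    print(row)
```

Sorting the regulator list keeps the worksheet order stable between runs. A use case that matches no listed attribute yields an empty worksheet, which is a prompt to check whether another regulator applies rather than evidence that none does.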

The UK’s approach allows medium-sized enterprises to adopt a proportionate, risk-based compliance strategy that focuses resources on applications with the highest potential for harm while maintaining freedom to innovate in lower-risk areas. By monitoring the evolving guidance from sectoral regulators and participating in industry initiatives, companies can anticipate regulatory developments and maintain appropriate compliance as the framework continues to evolve.

The AI Opportunities Action Plan published in January 2025 also introduces additional support mechanisms for businesses implementing AI, including innovation funding, skills development programs, and regulatory advice services specifically designed for medium-sized enterprises. These resources can help companies not only comply with requirements but actively leverage the UK’s pro-innovation environment to develop competitive AI applications.