Here’s where the rubber meets the road. I was going to continue with a water analogy from earlier articles, but then I got scared by Perplexity (more on that in a different article), so let’s stay way, way away from water analogies and get down to brass tacks.

The whole point of this article is to give you a roadmap (or at least the shell of one) to get you through planning your first retrieval-augmented generation (RAG) project. The following roadmap attempts to be as pragmatic as possible by leveraging three things:

- Some reasonable estimates from my decades of experience with projects like this

- The current state-of-the-art

- The inherent “how long is a piece of string” unknowns about your project proper

And I’m putting one key restriction in place: No large-enterprise-sized budgets required, just smart planning and execution.

The 60-Day Challenge

This is the overall timeline that we’re going to be discussing below. I’d like you to note a few things. First, the first working system is delivered only 60 days from “go.” This is important. Do not, and I really mean this, do not get hung up on trying to figure out and solve all the minor problems ahead of time. Sure, you’ll hit a bunch of minor problems, and some big ones that you didn’t expect. But that’s the whole point: this sort of implementation is different enough that it will be inherently hard for you to anticipate everything that can happen.

The best way to understand is to buckle down and do it. Drop features if you need to, scale back the scope of content, disappoint your boss, do whatever you need; but have a working system within 60 days. I promise that your boss’s disappointment will evaporate when you have something offering real value.

There’s nothing magical about 60 days; it’s just a round number, and it puts a cap on things so that you focus on getting something into users’ hands. Because once they start using it, I guarantee (upon my Capacitor of Accountability) that your project requirements are going to change. And that will ripple through your plan in unpredictable ways.

This isn’t like a simple upgrade to an email client – RAG/genAI is a new class of tool, and it comes with a heaping helping of twists and turns.

The Cheating (aka, Stuff to Decide BEFORE the Clock Starts)

Part of what’s going to make our 60-day thing work is that we’re going to make a couple of decisions before we officially start the clock. Some may consider this to be cheating. I just look at it as a pragmatic interpretation of the rules. Plus, I’m making the rules, so…

Decision 1) What Problem are you Trying to Solve?

Look, this will never work if you’re like… “Hm, RAG sounds nice” and then you start the 60-day clock. Instead, put the RAG bit aside and ask: “Do we have something that is amenable to generative AI, but where the AI doesn’t know enough about the context of the situation to be useful?” Or maybe you’re already using genAI somewhere and you have information that could make it even better. If you don’t have an application in mind, go back to the previous articles to see if anything gets your juices flowing.

Decision 2) What are the User Journeys? What are the User Flows?

I will not make this article a masterclass in specifying and scheduling a project. I’m happy to do more of it as we go, but this isn’t my core expertise. I’ve been involved with, er, probably 50 projects? And I’ve run more than a dozen relatively significant ones myself.

Define one, simple, user journey. Define 1-5 user flows.

What’s the difference? A user journey is a broader, wide-ranging view of all the different touchpoints it took for the user to get to a certain point – the emotions at each, the decisions that led to the goal. User flows are the actions the user takes at one particular stage, the fine-grained “and then they clicked here” interactions.

And this is why I suggest one (very simple) journey, one to five flows. At the very minimum, just do one flow. You don’t have to go all crazy UX-design-wise. Just make sure you’ve thought through at least one end-to-end, start-to-finish experience. It’s the micro equivalent of what we’re doing on a macro scale with this entire project – just do one because you learn so much.

The flow should include logging in, finding the search/chat, interacting, parsing the answer, etc.

IMPORTANT: Complete these user journeys and flows BEFORE starting your 60-day clock. This pre-work is essential for a focused implementation.

The A Team / B Team Leapfrog Approach

One of the most effective ways to implement your RAG system within the 60-day window is to use an A Team / B Team leapfrog approach. This strategy creates two parallel teams with different focus areas that work in tandem, passing the baton back and forth at key points.

Team Structure

Team A (Technical Foundation)

- Focus: Data engineering, integration, system architecture
- Key Responsibilities:
  - Data preparation and pipeline development
  - Vector database setup
  - API integrations
  - Security implementation
  - Performance optimization

Team B (Business Application)

- Focus: Use case development, user experience, business value
- Key Responsibilities:
  - Prompt engineering
  - User interface/experience
  - Training and documentation
  - Feedback collection and implementation
  - Success metrics tracking

Why This Works

This approach offers several advantages:

- Continuous Progress: While one team is waiting for feedback or approvals, the other can be moving forward

- Specialized Focus: Teams can develop different expertise areas

- Risk Mitigation: If one team encounters blockers, the other can still make progress

- Knowledge Redundancy: Creates multiple experts rather than single points of failure

- Accelerated Timeline: Potential to compress the 60-day timeline

```mermaid
gantt
    title RAG Implementation - A/B Team Leapfrog Approach
    dateFormat YYYY-MM-DD
    section Team A (Technical)
    Data Assessment :a1, 2025-04-01, 14d
    Solution Evaluation :a2, after a1, 14d
    System Integration :a3, after a2, 14d
    Performance :a4, after a3, 14d
    section Team B (Business)
    Success Metrics :b1, 2025-04-01, 14d
    Prompt Design :b2, after b1, 14d
    Testing :b3, after b2, 14d
    Training & Feedback :b4, after b3, 14d
    section Convergence
    Launch :c1, after a4, 7d
```
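The chart’s dependencies can be checked with a few lines of code. This is a minimal sketch, assuming Mermaid-style semantics where `after a1` starts a task when `a1` ends, and counting each end date as exclusive; the `TASKS` structure and `schedule` helper are hypothetical, copied by hand from the chart. Run as written, it puts the launch window at 2025-05-27 to 2025-06-03, a 63-day span from kickoff, which is why launch lands in week 9.

```python
from datetime import date, timedelta

# Tasks copied from the Gantt chart: id -> (start, duration in days).
# A start is either a fixed date or ("after", other_id), mirroring Mermaid's "after" keyword.
TASKS = {
    "a1": (date(2025, 4, 1), 14),
    "a2": (("after", "a1"), 14),
    "a3": (("after", "a2"), 14),
    "a4": (("after", "a3"), 14),
    "b1": (date(2025, 4, 1), 14),
    "b2": (("after", "b1"), 14),
    "b3": (("after", "b2"), 14),
    "b4": (("after", "b3"), 14),
    "c1": (("after", "a4"), 7),
}

def schedule(tasks):
    """Resolve every task to (start, end), where end is exclusive."""
    resolved = {}

    def resolve(task_id):
        if task_id not in resolved:
            start, days = tasks[task_id]
            if not isinstance(start, date):
                _, dependency = start
                start = resolve(dependency)[1]  # begin the day the dependency ends
            resolved[task_id] = (start, start + timedelta(days=days))
        return resolved[task_id]

    for task_id in tasks:
        resolve(task_id)
    return resolved

plan = schedule(TASKS)
kickoff = min(start for start, _ in plan.values())
finish = max(end for _, end in plan.values())
print(f"Launch window: {plan['c1'][0]} to {plan['c1'][1]}")
print(f"Total span: {(finish - kickoff).days} days")
```

If you slip a task, change its duration in `TASKS` and rerun to see how far the launch moves.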

Making the A/B Team Approach Work

For the A/B team leapfrog approach to work effectively, you need solid coordination mechanisms:

- Daily Standups: Brief cross-team updates (15 minutes max)

- Biweekly Handoffs: Formal knowledge transfer sessions every two weeks, at each phase boundary

- Shared Documentation: Central repository for decisions and progress

- Clear Dependencies Map: Visual representation of how Team A’s work impacts Team B and vice versa

- Executive Sponsor: Single decision-maker for cross-team conflicts

```mermaid
flowchart LR
    subgraph "Team A (Technical)"
        A1[Data Assessment] --> A2[Solution Architecture]
        A2 --> A3[System Integration]
        A3 --> A4[Performance Optimization]
    end
    subgraph "Team B (Business)"
        B1[Success Metrics Definition] --> B2[Prompt Engineering]
        B2 --> B3[Testing]
        B3 --> B4[Training & Feedback]
    end
    A1 -.-> B1
    A2 -.-> B2
    A3 -.-> B3
    B2 -.-> A3
    B3 -.-> A4
```
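A dependencies map is also easy to sanity-check in code. The sketch below uses Python’s standard-library `graphlib` (3.9+) and treats every arrow in the flowchart, solid or dotted, as a strict “must finish first” dependency. That reading is stricter than the overlapping Gantt chart, and it and the `DEPENDENCIES` dictionary are assumptions for illustration. If a change to the plan ever makes two tasks wait on each other, `static_order()` raises `graphlib.CycleError` instead of producing an order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each task maps to the set of tasks it waits on.
# In-team arrows and cross-team (dotted) handoffs are both treated as dependencies.
DEPENDENCIES = {
    "A2 Solution Architecture": {"A1 Data Assessment"},
    "A3 System Integration": {"A2 Solution Architecture", "B2 Prompt Engineering"},
    "A4 Performance Optimization": {"A3 System Integration", "B3 Testing"},
    "B1 Success Metrics Definition": {"A1 Data Assessment"},
    "B2 Prompt Engineering": {"B1 Success Metrics Definition", "A2 Solution Architecture"},
    "B3 Testing": {"B2 Prompt Engineering", "A3 System Integration"},
    "B4 Training & Feedback": {"B3 Testing"},
}

# static_order() yields every task after all of its dependencies,
# or raises graphlib.CycleError if the map contains a loop.
order = list(TopologicalSorter(DEPENDENCIES).static_order())
for step, task in enumerate(order, start=1):
    print(step, task)
```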

The Actual Implementation Timeline

Let’s break down the 60-day implementation into specific phases, tasks, and timeframes:

Phase 1: Assess Data Readiness (Weeks 1-2)

| Task Name | Team | Start | Hours |
|---|---|---|---|
| Data Inventory | A | Week 1 | 40 |
| Quality Evaluation | A | Week 1 | 32 |
| Identify Key Sources | A | Week 2 | 24 |
| Set Success Metrics | B | Week 1 | 12 |
| Create 30-60-90 Plan | B | Week 2 | 24 |

Key Activities for Team A:

- Inventory your existing data sources (documents, knowledge bases, CRM, etc.)

- Evaluate data quality, completeness, and accessibility

- Identify high-value data sources for initial implementation

- Assess security and compliance requirements
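A first pass at quality evaluation can be automated. This minimal sketch assumes each document has an id, its text, and an optional last-updated date; the `Document` class, `quality_report` helper, and the 200-character threshold are hypothetical. It flags exact duplicates (after normalizing case and whitespace), near-empty documents, and documents nobody can date. It won’t catch near-duplicates or content that is dated but stale, so treat it as a starting checklist.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional

@dataclass
class Document:
    doc_id: str
    text: str
    last_updated: Optional[str] = None  # ISO date string; None means unknown

def quality_report(docs, min_chars=200):
    """Flag duplicate, very short, and undated documents."""
    first_seen = {}  # content hash -> id of the first document with that content
    report = {"duplicates": [], "too_short": [], "missing_date": []}
    for doc in docs:
        # Normalize case and whitespace so trivially reformatted copies hash the same.
        normalized = " ".join(doc.text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in first_seen:
            report["duplicates"].append((doc.doc_id, first_seen[digest]))
        else:
            first_seen[digest] = doc.doc_id
        if len(normalized) < min_chars:
            report["too_short"].append(doc.doc_id)
        if doc.last_updated is None:
            report["missing_date"].append(doc.doc_id)
    return report

# Hypothetical sample documents.
docs = [
    Document("policy-v1", "Refund policy: " * 20, "2024-01-10"),
    Document("policy-copy", "REFUND   POLICY: " * 20),
    Document("draft", "TBD"),
]
print(quality_report(docs))
```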

Key Activities for Team B:

- Set clear success metrics for the initial phase

- Create a 30-60-90 day implementation plan

- Assemble a cross-functional team with business and IT representation

Practical Tip: Focus on data quality over quantity. A well-organized subset of your information will yield better results than massive volumes of disorganized data.

Red Flag Check: If more than 30% of your critical business information exists only in people’s heads or in highly unstructured formats, consider a preliminary knowledge capture project before full RAG implementation. Think of it as cleaning your room before inviting the robot vacuum in—otherwise, it’ll just get confused and bump into things.
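The 30% check is simple arithmetic once the data inventory exists. This sketch assumes you can estimate, per source, how many critical topics it covers and tag how that knowledge is stored; the form categories, the counts, and the `red_flag_share` helper are all hypothetical.

```python
# Storage forms that count toward the red flag (hypothetical labels).
RISKY_FORMS = {"tacit", "highly_unstructured"}

def red_flag_share(inventory):
    """Share of critical topics held only in people's heads or highly unstructured formats."""
    total = sum(topics for _, topics, _ in inventory)
    risky = sum(topics for _, topics, form in inventory if form in RISKY_FORMS)
    return risky / total if total else 0.0

# (source, number of critical topics it covers, how the knowledge is stored)
inventory = [
    ("CRM records", 50, "structured"),
    ("Policy wiki", 30, "semi_structured"),
    ("Scanned contracts", 10, "highly_unstructured"),
    ("Senior support staff", 15, "tacit"),
]
share = red_flag_share(inventory)
print(f"{share:.0%} of critical topics are tacit or highly unstructured")
if share > 0.30:
    print("Red flag: consider a knowledge capture project first")
```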

Phase 2: Technical Planning & Design (Weeks 3-4)

| Task Name | Team | Start | Hours |
|---|---|---|---|
| Evaluate Solutions | A | Week 3 | 32 |
| Compare Costs | A | Week 3 | 16 |
| Make Build/Buy Decision | A | Week 4 | 4 |
| Data Processing Pipeline Design | A | Week 4 | 24 |
| Prompt Engineering Strategy | B | Week 3 | 20 |
| UI/UX Design | B | Week 3-4 | 32 |
| Evaluation Framework | B | Week 4 | 16 |

Key Activities for Team A:

- Evaluate commercial RAG solutions with mid-market focus

- Compare costs, implementation requirements, and timelines

- Assess technical expertise required for each option

- Consider integration capabilities with your existing systems

Key Activities for Team B:

- Design initial prompt templates

- Create mockups of user interfaces

- Develop evaluation criteria for system performance

- Plan user testing approach
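Initial prompt templates usually wrap the retrieved passages and the user’s question in instructions to stay grounded in those passages. Here’s a minimal sketch; the wording, the `build_prompt` helper, and the `source`/`text` fields on each chunk are assumptions to adapt, not a canonical RAG prompt.

```python
def build_prompt(question, chunks):
    """Assemble a grounded prompt: numbered source excerpts, then the question.

    chunks: list of dicts with hypothetical "source" and "text" keys.
    """
    sources = "\n\n".join(
        f"[{i}] ({chunk['source']})\n{chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "You are an assistant for our internal knowledge base.\n"
        "Answer using only the numbered sources below. Cite sources like [1].\n"
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

prompt = build_prompt(
    "How long is the refund window?",
    [{"source": "refund-policy.md", "text": "Refunds are accepted within 30 days."}],
)
print(prompt)
```

Keeping the template in one function makes it easy for Team B to version and A/B test prompt wording without touching the retrieval code.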

Practical Tip: For most mid-market companies, starting with a commercial RAG platform provides faster time-to-value than building a custom solution from scratch.

Cost Consideration: Budget roughly $150,000 for initial implementation of a commercial mid-market RAG solution versus $500,000 or more for custom development; by the estimates below, commercial options typically reach positive ROI 3-4x faster.

Phase 3: Implementation & Integration (Weeks 5-6)

| Task Name | Team | Start | Hours |
|---|---|---|---|
| System Integration | A | Week 5 | 40 |
| Auth & Security | A | Week 5-6 | 24 |
| Data Pipeline Setup | A | Week 6 | 24 |
| Initial Deployment | B | Week 5 | 40 |
| Prompt Refinement | B | Week 6 | 16 |
| Test Case Development | B | Week 6 | 20 |

Key Activities for Team A:

- Integrate the RAG system with existing infrastructure

- Implement authentication and security measures

- Set up data ingestion, chunking, and embedding pipeline

- Configure vector database and retrieval mechanisms
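To make ingestion, chunking, embedding, and retrieval concrete, here’s a minimal sketch of the moving parts: fixed-size word windows with overlap, one vector per chunk, and similarity search. The bag-of-words `embed` function and the `InMemoryIndex` class are hypothetical stand-ins for a real embedding model and vector database; the knobs (chunk size, overlap, how many results to return) are the kind you’ll end up tuning either way.

```python
import math
import re
from collections import Counter

def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into windows of chunk_size words; neighbors share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks

def embed(text):
    """Stand-in for a real embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())  # Counter returns 0 for missing terms
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0

class InMemoryIndex:
    """Stand-in for a vector database: stores (chunk, vector) pairs, ranks by cosine similarity."""

    def __init__(self):
        self._entries = []

    def add(self, chunks):
        self._entries.extend((chunk, embed(chunk)) for chunk in chunks)

    def search(self, query, k=3):
        query_vec = embed(query)
        ranked = sorted(self._entries, key=lambda entry: cosine(query_vec, entry[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

index = InMemoryIndex()
index.add([
    "refund policy allows returns within 30 days",
    "vpn setup guide for remote staff",
    "expense reports are due monthly",
])
print(index.search("how do refund returns work", k=1))
```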

Key Activities for Team B:

- Deploy initial version in controlled environment

- Refine prompts based on early testing

- Develop comprehensive test cases

- Begin documentation for users
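One common way to turn test cases into a number is hit rate at k: the share of test questions whose known-correct source shows up in the top k retrieved results. The sketch below assumes each test case pairs a question with one expected source id, and `fake_retrieve` is a hypothetical stub; swap in a call to your real system. It grades retrieval only, not the generated answer, but it’s cheap to rerun after every prompt or pipeline change.

```python
def evaluate_retrieval(test_cases, retrieve, k=3):
    """Return (hit rate at k, questions whose expected source was not retrieved).

    test_cases: list of (question, expected_source_id) pairs.
    retrieve:   any function (question, k) -> list of source ids.
    """
    if not test_cases:
        raise ValueError("add at least one test case")
    misses = [q for q, expected in test_cases if expected not in retrieve(q, k)]
    return 1 - len(misses) / len(test_cases), misses

# Hypothetical stub standing in for the real system while test cases are being written.
FAKE_RESULTS = {
    "How long do customers have to return items?": ["refund-policy", "faq"],
    "Who approves travel over budget?": ["faq", "expense-policy"],
    "How do I reset my VPN token?": ["it-vpn-guide"],
    "What is the parental leave policy?": [],
}

def fake_retrieve(question, k):
    return FAKE_RESULTS.get(question, [])[:k]

cases = [
    ("How long do customers have to return items?", "refund-policy"),
    ("Who approves travel over budget?", "travel-policy"),
    ("How do I reset my VPN token?", "it-vpn-guide"),
    ("What is the parental leave policy?", "hr-handbook"),
]
rate, misses = evaluate_retrieval(cases, fake_retrieve)
print(f"hit rate@3: {rate:.0%}")
for question in misses:
    print("missed:", question)
```

The list of misses is often more useful than the score: each one points at a missing document, a bad chunk, or a question users phrase differently than your content does.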

Practical Tip: Set up a regular sync between Teams A and B to ensure technical implementation aligns with business requirements.

Phase 4: Testing & Refinement (Weeks 7-8)

| Task Name | Team | Start | Hours |
|---|---|---|---|
| Performance Optimization | A | Week 7 | 32 |
| Data Update Process | A | Week 7-8 | 20 |
| Security Validation | A | Week 8 | 16 |
| Pilot Group Training | B | Week 7 | 24 |
| Feedback Collection | B | Week 7-8 | 20 |
| Performance Refinement | B | Week 8 | 32 |

Key Activities for Team A:

- Optimize retrieval performance

- Implement processes for regular data updates

- Validate security measures

- Address technical issues identified in testing
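For regular data updates, a common pattern is to store a content hash for each document at indexing time and, on each sync, re-embed only what changed. A minimal sketch, assuming documents have stable ids; `plan_sync` and the sample ids are hypothetical.

```python
import hashlib

def content_hash(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def plan_sync(current_docs, indexed_hashes):
    """Compare the source system (doc_id -> text) with what the index was built from
    (doc_id -> content hash) and list what to embed, re-embed, or delete."""
    added, changed = [], []
    for doc_id, text in current_docs.items():
        if doc_id not in indexed_hashes:
            added.append(doc_id)
        elif indexed_hashes[doc_id] != content_hash(text):
            changed.append(doc_id)
    removed = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return {"added": sorted(added), "changed": sorted(changed), "removed": sorted(removed)}

indexed = {
    "travel-policy": content_hash("Managers approve travel."),
    "faq": content_hash("FAQ v1"),
    "old-memo": content_hash("Retired"),
}
current = {
    "travel-policy": "Managers approve travel.",
    "faq": "FAQ v2",
    "vpn-guide": "Reset tokens nightly.",
}
print(plan_sync(current, indexed))  # {'added': ['vpn-guide'], 'changed': ['faq'], 'removed': ['old-memo']}
```

Handling `removed` matters as much as `added`: stale chunks left in the index are a common source of confidently wrong answers.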

Key Activities for Team B:

- Train pilot user group

- Collect structured feedback

- Refine system based on user input

- Prepare for wider rollout

Practical Tip: Create a formal feedback loop where pilot users can report issues and suggest improvements.
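A formal feedback loop can start as simply as logging a thumbs up or down per answer and reviewing the questions that draw the most complaints. The sketch below assumes a hypothetical `Feedback` record; grouping by exact question text is a simplification, since real users phrase the same question many ways.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Feedback:
    user: str
    question: str
    helpful: bool
    comment: str = ""

def summarize(feedback, top_n=3):
    """Overall helpful rate plus the questions most often marked unhelpful."""
    if not feedback:
        return {"responses": 0, "helpful_rate": None, "most_flagged": []}
    unhelpful = Counter(item.question for item in feedback if not item.helpful)
    return {
        "responses": len(feedback),
        "helpful_rate": sum(item.helpful for item in feedback) / len(feedback),
        "most_flagged": unhelpful.most_common(top_n),
    }

# Hypothetical pilot feedback.
pilot = [
    Feedback("ana", "What is our refund window?", True),
    Feedback("ben", "Who approves travel over budget?", False, "Cited the old policy"),
    Feedback("chen", "Who approves travel over budget?", False),
    Feedback("dara", "How do I reset my VPN token?", False, "No answer found"),
]
print(summarize(pilot))
```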

Phase 5: Convergence & Launch (Week 9)

| Task Name | Team | Start | Hours |
|---|---|---|---|
| Final Integration | A+B | Week 9 | 20 |
| Launch Preparation | A+B | Week 9 | 16 |
| Handover Documentation | A+B | Week 9 | 24 |
| Go-Live | A+B | Week 9 | 8 |
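Adding up the Hours columns from the five phase tables gives a rough staffing check: 652 hours in total, 328 for Team A, 256 for Team B, and 68 shared. The sketch below assumes 40 productive hours per person per week (optimistic; lower it for meetings and other duties). Under that assumption, each team needs between roughly half a person and 1.2 people per phase, and the shared week 9 work needs about 1.7. The `PHASES` structure and helper names are hypothetical.

```python
from collections import defaultdict

HOURS_PER_PERSON_WEEK = 40  # assumption: adjust for meetings and other duties

# (phase, duration in weeks, [(team, hours), ...]) copied from the five phase tables
PHASES = [
    ("Phase 1", 2, [("A", 40), ("A", 32), ("A", 24), ("B", 12), ("B", 24)]),
    ("Phase 2", 2, [("A", 32), ("A", 16), ("A", 4), ("A", 24), ("B", 20), ("B", 32), ("B", 16)]),
    ("Phase 3", 2, [("A", 40), ("A", 24), ("A", 24), ("B", 40), ("B", 16), ("B", 20)]),
    ("Phase 4", 2, [("A", 32), ("A", 20), ("A", 16), ("B", 24), ("B", 20), ("B", 32)]),
    ("Phase 5", 1, [("A+B", 20), ("A+B", 16), ("A+B", 24), ("A+B", 8)]),
]

def hours_by_team(phases):
    totals = defaultdict(int)
    for _, _, tasks in phases:
        for team, hours in tasks:
            totals[team] += hours
    return dict(totals)

def people_needed(phases, hours_per_week=HOURS_PER_PERSON_WEEK):
    """Full-time-equivalent headcount each team needs in each phase."""
    staffing = {}
    for phase, weeks, tasks in phases:
        per_team = defaultdict(int)
        for team, hours in tasks:
            per_team[team] += hours
        staffing[phase] = {
            team: round(hours / (weeks * hours_per_week), 2) for team, hours in per_team.items()
        }
    return staffing

print(hours_by_team(PHASES))  # {'A': 328, 'B': 256, 'A+B': 68}
for phase, teams in people_needed(PHASES).items():
    print(phase, teams)
```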

Key Activities for Both Teams:

- Final system integration and testing

- Complete documentation

- Prepare launch communications

- Go-live activities

Practical Tip: Plan a soft launch with a limited user group before full deployment.
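One common way to run a soft launch is a deterministic percentage rollout: hash each user id into one of 100 buckets and enable the system for buckets below the current percentage, so widening the launch only ever adds users. A minimal sketch; `in_rollout`, the salt string, and the user names are hypothetical, and a feature-flag service you already use can do the same job.

```python
import hashlib

def in_rollout(user_id: str, percent: int, salt: str = "rag-soft-launch") -> bool:
    """True if this user falls in the first `percent` of 100 buckets.

    The same user always lands in the same bucket, so raising `percent` only adds users.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent

# Hypothetical ramp: pilot group first, then widen without reshuffling who is in.
for percent in (5, 25, 100):
    enabled = [u for u in ("ana", "ben", "chen", "dara") if in_rollout(u, percent)]
    print(percent, enabled)
```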

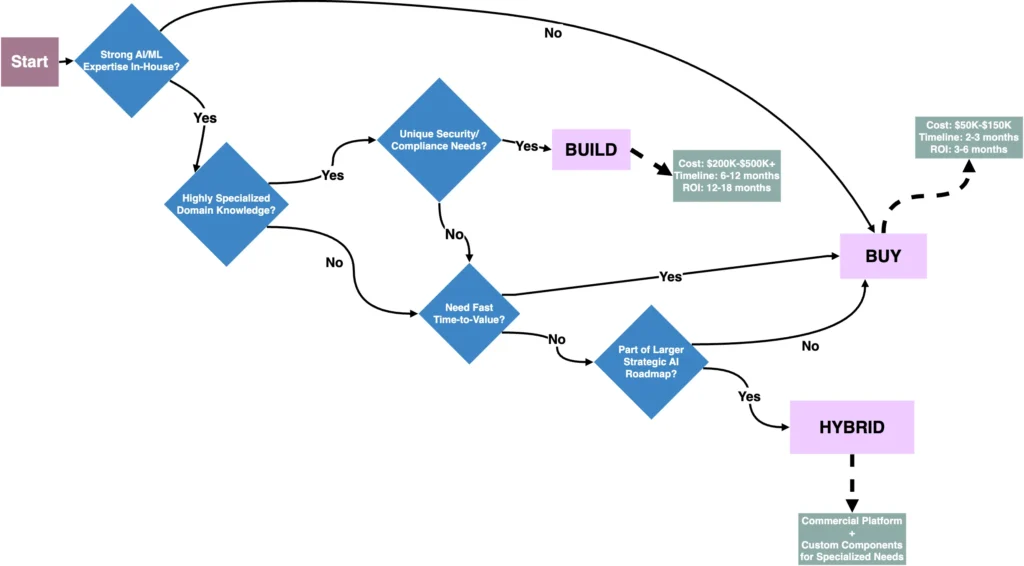

The Build vs. Buy Decision

Let’s break down when to build and when to buy your RAG solution:

When Building Makes Sense

Consider the build approach if:

- You have highly specialized domain knowledge that commercial solutions don’t adequately address

- Your IT team has strong AI/ML capabilities and experience with vector databases, embeddings, and LLM integration

- You have unique security or compliance requirements that off-the-shelf solutions can’t satisfy

- You need deep integration with proprietary systems that lack standard APIs or connectors

- You have a long-term strategic AI roadmap where RAG is just one component of a broader AI initiative

- You anticipate significant scaling needs where pay-per-user or pay-per-query commercial models would become prohibitively expensive

- You require full control over the roadmap and features to maintain competitive advantage

Build Approach Example: A Bell case study documented by Evidently AI shows how they implemented a modular document embedding pipeline to ensure employees have access to up-to-date company policies. This custom approach addressed their specific knowledge management requirements and integration needs.

When Buying Makes Sense

Consider the buy approach if:

- You need faster time-to-value (2-3 months vs. 6-12 months for custom development)

- You have limited in-house AI/ML expertise

- You need proven reliability with established platforms

- Maintenance and updates are a concern

- Your use cases align with common RAG applications without highly specialized requirements

Buy Approach Example: According to Vectara’s implementation research, mid-market companies can avoid “vendor chaos” by leveraging RAG-as-a-service solutions. These platforms eliminate the complexities of managing multiple vendors and their respective infrastructure requirements.

Cost Comparison

| Factor | Build Approach | Buy Approach |
|---|---|---|
| Initial Development | $500,000+ | $150,000 |
| Implementation Timeline | 6-12 months | 2-3 months |
| Ongoing Annual Maintenance | 20-30% of initial development ($40,000-150,000/yr) | Subscription costs ($36,000-120,000/yr) |
| 3-Year Total Cost of Ownership | $320,000-950,000 | $158,000-510,000 |
| 5-Year Total Cost of Ownership | $440,000-1,300,000 | $230,000-750,000 |
| Required IT Expertise | High (ML engineers, data scientists) | Moderate (integration specialists) |
| Time to Positive ROI | 12-18 months | 3-6 months |
| Flexibility for Customization | High – exact fit to business needs | Limited to vendor capabilities |
| Scalability | Unlimited – pay only for infrastructure | Often tier-based pricing with user/query limits |
| Ownership/IP | Complete ownership of solution | Dependent on vendor platform |
| Long-term Control | Full control over feature roadmap | Subject to vendor pricing and feature changes |

Important Note: Run these numbers for yourself, with your own case and under your own circumstances. Maybe they lean the other way, and building turns out cheaper for you in the long term. Just please don’t underestimate what it will cost to maintain a system like this; if you haven’t done a few projects, ask people who have.
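The table’s total-cost rows boil down to upfront cost plus years of annual cost. A minimal sketch of that arithmetic (ignoring discounting, inflation, and internal staff time, which is an assumption) makes it easy to rerun with your own figures; for example, `total_cost(50_000, 36_000, 3)` gives the Buy column’s $158,000 lower bound. The build and buy inputs below are hypothetical, chosen to show how a cheaper upfront option can lose over a long enough horizon.

```python
def total_cost(upfront, annual, years):
    """Upfront cost plus a flat annual cost; ignores discounting, inflation, and staff time."""
    return upfront + annual * years

def compare(build, buy, years):
    """build and buy are (upfront, annual) pairs; ties go to buying."""
    build_cost = total_cost(*build, years)
    buy_cost = total_cost(*buy, years)
    return ("build" if build_cost < buy_cost else "buy"), build_cost, buy_cost

# Hypothetical inputs; replace with your own quotes and estimates.
build = (300_000, 60_000)  # (upfront, annual maintenance)
buy = (100_000, 90_000)    # (upfront, annual subscription)
for years in (3, 5, 10):
    print(years, compare(build, buy, years))
```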

Even if you think you want to build, do yourself a favor and buy first, with a hybrid approach. See the next section.

Here’s a hot tip: over 50% of your headaches, and maybe even more of your spend, will go to dealing with data issues.

The Hybrid Approach: A Mid-Market Sweet Spot

Many successful mid-market RAG implementations take a hybrid approach that balances speed-to-market with long-term control and cost optimization:

- Start with a commercial platform for rapid deployment and initial value capture

- Build custom components only for specific high-value, specialized needs

- Use APIs and extension points provided by the commercial platform rather than building from scratch

- Plan for potential migration to more custom components over time as usage scales and expertise grows

This approach provides the best of both worlds: rapid deployment and proven reliability of commercial platforms with the flexibility to address specific needs through targeted customization. It also creates a natural evolution path from “quick win” to “strategic advantage” as your organization’s AI maturity increases.
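One way to keep the option of replacing vendor components later is to have your application talk to a small interface of your own rather than to the vendor SDK directly. The sketch below assumes a `Retriever` protocol with a single `search` method; `VendorRetriever` wraps whatever search function your platform actually provides (passed in, since real SDK calls vary), and `KeywordRetriever` is a deliberately simple stand-in for an in-house component. All of these names are hypothetical.

```python
from typing import Callable, List, Protocol

class Retriever(Protocol):
    """The only retrieval surface the rest of the application depends on."""
    def search(self, query: str, k: int) -> List[str]: ...

class VendorRetriever:
    """Adapter around a commercial platform's search call (passed in, not a real SDK)."""
    def __init__(self, vendor_search: Callable[[str, int], List[str]]):
        self._vendor_search = vendor_search

    def search(self, query: str, k: int) -> List[str]:
        return self._vendor_search(query, k)

class KeywordRetriever:
    """Simple in-house replacement: ranks passages by the number of words shared with the query."""
    def __init__(self, passages: List[str]):
        self._passages = passages

    def search(self, query: str, k: int) -> List[str]:
        terms = set(query.lower().split())
        scored = [(len(terms & set(p.lower().split())), p) for p in self._passages]
        scored.sort(key=lambda pair: pair[0], reverse=True)  # most shared words first
        return [p for score, p in scored[:k] if score > 0]

def build_context(retriever: Retriever, query: str, k: int = 3) -> str:
    """Application code sees only the Retriever interface, so backends can be swapped."""
    return "\n---\n".join(retriever.search(query, k))

vendor = VendorRetriever(lambda query, k: [f"(vendor) result for: {query}"][:k])
in_house = KeywordRetriever(["refund window is 30 days", "vpn tokens reset nightly"])
for backend in (vendor, in_house):
    print(build_context(backend, "refund window", k=1))
```

Swapping a vendor component for a custom one then means writing a new class with the same `search` method, not rewriting every caller.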

Hybrid Approach Example: Ramp’s customer classification system demonstrates how a mid-market company built an in-house RAG-based system to standardize customer classification, initially leveraging commercial components for core functionality. Over time, they replaced vendor components with custom solutions in high-value areas, maintaining standardization while addressing their specific business needs and controlling long-term costs.

Common Pitfalls to Avoid

- Scope Creep: Stick to your initial use case and resist the temptation to add “just one more feature”

- Data Perfectionism: Don’t wait for perfect data—start with what you have and improve iteratively

- Over-engineering: For your first implementation, simpler is better

- Ignoring User Feedback: The system exists to serve users, not to showcase technology

- Underestimating Data Challenges: Data quality, access, and processing will likely be your biggest hurdles

Final Thoughts

Remember, the goal is to have a working system within 60 days. It won’t be perfect, but it will be valuable. The A/B team leapfrog approach gives you the best chance of meeting this timeline while creating a system that delivers real business value.

The most important thing is to start, learn, and iterate. Your second RAG implementation will be dramatically better than your first—but you can’t get to the second without completing the first.

So, set your 60-day clock, assemble your teams, and get moving. The water’s fine… wait, I promised no water analogies. Let’s just say: the future is waiting, and it’s only 60 days away.