I. Introduction

What makes a company who it is? (No, really, this isn’t some ‘how many angels can dance’ kind of question.) I would argue that there are several things – corporate culture, leadership, product set, history, and probably a few more that you can think of. And there’s one thing that every single one of those things shares – the data collected over time.

You have the HR data, you have the product performance data; you have the customer searches; you have the customer support; you have data, data, data. I don’t know if you remember, but I remember (as does Pepperidge Farm), a time probably 15 years ago (maybe 20?) when we were all promised that if we just saved all this data, we’d be able to turn it into money somehow. Blah blah behavior blah blah advertising blah, etc.

And so companies collected that data, and they hoarded it deep underground, in seismically safe, climate-controlled locations. Imagine Smaug and his gold. Except less gold (so far) and, well, lots less dragon.

And we developed terminology for larger (and more dispersed) ways to store data. From files to databases. From databases to data warehouses. From data warehouses to data lakes. And like a lake in the rain, the data collected flooded up over our ankles. Then our knees. Water is vital to life, but it will suffocate you if you’re under it. (Go write that down.)

And we found BI applications, analytics that were useful. (Of course, it’s a bazillion dollar industry.) Now, I will not promise to be Moses here. Parting the proverbial Red Sea. Nor am I Jesus. (Oh, the water analogies, they just keep on giving.) But I think that I’ve got something that at worst (if you do it right) is like having a pair of kids’ water wings, something that won’t, by itself, keep your company afloat, but that can offer a positive, albeit small benefit.

But, if done right, done in many places, and with a strong tailwind of luck, it will be more like a surfboard, and you’ll be like Jeff Clark – surfing Mavericks alone for 15 years before the other crazy big wave surfers came to his spot.

What is Retrieval Augmented Generation? A Business-Focused Explanation

Large language models are the core of language-based AI. They are the sort of technological feat that brings to mind the famous 3rd law of Arthur C. Clarke’s eponymous “Clarke’s 3 laws”:

Any sufficiently advanced technology is indistinguishable from magic

But like with magic (both stage and book), there’s a gotcha.

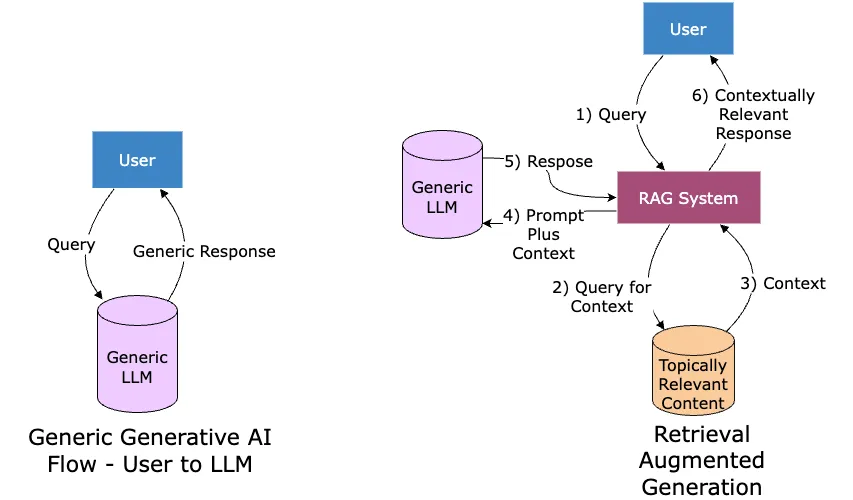

LLMs suffer from two major weaknesses – they are stuck in time, and they have no access to private/proprietary information. And thus we introduce the magic (science!) of Retrieval-Augmented Generation (RAG). RAG will help us tackle key issues like accuracy, data privacy, and cost, all while significantly helping simplify LLM integrations – despite adding more components.

RAG also puts control back in the hands of the user, of the business – bringing to bear the information they have at hand while using the semantic and syntactic knowledge of a true LLM, without having to make significant changes to that core LLM to make it useful.

How RAG Works in Plain Language

You ask a question (e.g., “What were the terms of our 2022 contract with Acme Corp?”)

RAG searches through your data (documents, databases, knowledge bases)

It finds the most relevant information (the actual contract terms)

It uses AI to generate a helpful response based on the retrieved information

You get an accurate answer grounded in your actual business data
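For the curious, here is a minimal sketch of those five steps in Python. Everything in it is an assumption made for illustration: the `DOCUMENTS` contents are invented, retrieval is plain word overlap rather than anything a production system would use, and `generate` is a placeholder where a real LLM call would go.

```python
import re

# Hypothetical stand-in for "your data": a few short internal documents.
DOCUMENTS = {
    "acme_contract_2022": "2022 contract with Acme Corp: net 30 payment terms, renewing annually.",
    "travel_policy": "Travel policy: employees book economy class for flights under six hours.",
    "support_runbook": "Support runbook: escalate outages to the on-call engineer within 15 minutes.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Search the data and keep the best matches, ranked by shared words."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc_id: len(question_words & tokenize(documents[doc_id])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, doc_ids: list[str], documents: dict[str, str]) -> str:
    """Put the retrieved text in front of the question."""
    context = "\n".join(documents[doc_id] for doc_id in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def generate(prompt: str) -> str:
    """Placeholder for a real LLM call (an assumption, not a real API)."""
    return f"[an LLM would answer here, given {len(prompt)} characters of prompt]"


question = "What were the terms of our 2022 contract with Acme Corp?"
top_docs = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, top_docs, DOCUMENTS)
print(generate(prompt))
```

The point of the sketch is the shape, not the parts: swap the word-overlap ranking for a real search index and the placeholder for a real model, and the flow stays the same.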

Imagine asking a traditional AI for help with a specific company policy. It tries its best but might give you a generic answer or even “hallucinate” details that sound plausible but are incorrect—you’ve probably seen these AI hallucinations firsthand. But with RAG? It can combine the information from the right page from your company’s policy handbook with the LLM-based generative AI smarts to summarize the point clearly and specifically. The result is both accurate (because it’s based on your actual documents) and helpful (because the AI presents the information in a clear, digestible way).

Think of RAG as a “smart librarian” for your business. Instead of relying solely on generative AI to create responses (like most large language models), RAG first “retrieves” relevant information from your existing data and then uses AI to craft an interesting, accurate, and context-driven response.

That “Topically Relevant Content” – it can be *anything* – it can even be another LLM. It can be the entirety of the web, but searched based on an up-to-date index combined with other tricks that give absolutely current results. (I use this technique quite a bit.) It can be support docs, it can be source code, it can be your emails, it can be Shakespeare’s Sonnets.

Why would you do that, you ask? It’s not like he’s making more of them, you continue. True! But, sometimes, with an LLM, it’s really good to absolutely shove its face into the idea that you’re trying to convey, and if that idea involves something having to do with sonnets or Shakespeare, then, it’s probably not going to hurt you to do this.

I have a book coming out sometime around the end of March, the first in a series of 3 (so far), all covering “Generative AI for the Curious Businessperson”. The first is “Transformers to Agentic Workflows, How to Think About GenAI and the Problems it Can and Cannot Solve”. This is the beginning of the chapter on RAG, from the second (drafted, but as yet unnamed) book. Here are some examples…

“We definitely would have put more thought into the name had we known our work would become so widespread. We always planned to have a nicer sounding name, but when it came time to write the paper, no one had a better idea” — Patrick Lewis, Lead Author

Retrieval Augmented Generation combines the linguistic, logic, and cognitive strengths of large language models (LLMs) with current, proprietary, and/or specialized data. This leads to more comprehensive and informative outputs. One might say, more useful.

Examples:

Legal: pull in cases of interest as they evolve over the course of a year. Even if you’re using a legal-focused LLM, they’re going to have to rebuild the LLM itself periodically, which means there will always be a lag between the law and the LLM. Rebuilding even a moderately sized LLM is an enormous effort, with a significant cost just to rent the cloud GPUs. Keeping a database of cases is trivial by comparison.

Customer Support: no LLM is going to know as much as you about your customers, your internal engineering debugging process, your hard-won competitive intel.

RAG works by bridging the gap between LLMs and external knowledge bases. Here’s a simplified breakdown:

Information Retrieval: When presented with a query, RAG first uses information retrieval techniques to find relevant information from an internal/proprietary/private knowledge base (like a database or a collection of documents). This could be done with keywords, semantic search, or any of a bunch of other methods.

Knowledge Integration: RAG feeds the information PLUS the query into a large language model (LLM).

Content Generation: The LLM leverages both the retrieved information and its own internal knowledge to generate a comprehensive and accurate response. In essence, RAG empowers LLMs to access and incorporate external information into their reasoning and generation process. This makes them less prone to hallucinations (producing inaccurate or fabricated information) and provides them with a wider range of information to draw upon.
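To make the Information Retrieval step a little less abstract, here is a rough sketch of similarity-based ranking. It is an illustration under loud assumptions: word counts stand in for the embeddings a real semantic search would get from a model, and the passages are invented. The cosine similarity calculation itself is the standard one.

```python
import math
import re
from collections import Counter


def to_vector(text: str) -> Counter:
    """Turn text into a word-count vector (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors; 0.0 if either is empty."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_passages(query: str, passages: list[str]) -> list[tuple[float, str]]:
    """Score every passage against the query and return them best-first."""
    query_vec = to_vector(query)
    scored = [(cosine_similarity(query_vec, to_vector(p)), p) for p in passages]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


# Hypothetical knowledge-base passages.
passages = [
    "Reset a customer password from the admin console under Accounts.",
    "Refunds are issued within five business days of an approved request.",
    "The office kitchen is cleaned every Friday afternoon.",
]
ranking = rank_passages("How do I reset a customer password?", passages)
best_score, best_passage = ranking[0]
print(best_passage)
```

The top-ranked passage is what would get handed to the LLM alongside the query in the Knowledge Integration step. A real system would likely replace `to_vector` with a proper embedding model, which can match on meaning rather than on exact words.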

Let’s Talk More About Why

RAG is important for mid-market enterprises. And in this article and the two following it, we’re going to go from definition through validation of your implementation. We’ll stop at benefits, scoping, testing, and some of my best “best practices.”

Put in very marketing-y optimist terms: RAG can bring genAI in line with your business, and thus RAG can help you streamline operations, improve decision-making, enhance customer experience, or do more with less.

The following set of case studies was developed in partnership with: Perplexity. Not the people there, but the “Deep Research” feature – which is mostly fantastic, but sometimes deeply weird (foreshadowing! Could I say something like that and not deliver? No, I’d never do that to you. As much as you might want me to.)

Without further ado, what are some benefits that real companies have seen with real implementations? (Sidenote, what I had it do was go and find all the RAG technology providers it could find, and then snarf up all of the case studies. Savagely brilliant. It makes it look like I’m doing work!)

1. Overcoming the “Data-Rich but Insights-Poor” Dilemma

Case Study: U.S. Financial Services Firm (Coditude)

A mid-sized financial services firm specializing in investment strategies faced delays in analyzing terabytes of transactional data, regulatory filings, and market reports. Manual processes caused critical insights about emerging risks and opportunities to remain buried. Coditude implemented a RAG system that dynamically retrieved and cross-referenced data from CRM platforms, Bloomberg terminals, and internal compliance databases. The solution reduced time-to-insight by 72%, enabling analysts to detect patterns in sector-specific risks (e.g., energy market volatility) that were previously overlooked.

Case Study: Manufacturing Knowledge Consolidation (Imbrace)

A global manufacturer with decades of unstructured data across ERP systems, PDF manuals, and legacy databases struggled to resolve equipment failures. Engineers wasted 15–20 hours weekly searching for technical specifications. Imbrace’s RAG platform unified these sources into a vectorized knowledge graph, allowing natural language queries like, “List all maintenance logs for hydraulic press Model X since 2022.” This reduced mean-time-to-repair by 40% and cut onboarding time for new technicians by 35%.

Technical Analysis

RAG addresses data fragmentation by combining dense retrieval (e.g., FAISS indexing) with contextual generation, enabling semantic search across disparate formats. For the financial firm, hybrid search algorithms prioritized recent SEC filings while filtering outdated entries, ensuring analysts received time-sensitive insights, according to Coditude and Enterprise Knowledge.
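The case study does not say how the firm’s system actually weighed recency, so treat the following as one hypothetical way the “prioritize recent, filter outdated” idea might look. The `Filing` type, the 365-day cutoff, the 0.3 weight, and the sample filings are all assumptions invented for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Filing:
    title: str
    filed_on: date
    relevance: float  # assumed to come from a retriever, scaled to [0, 1]


def recency_weighted_score(
    filing: Filing,
    today: date,
    max_age_days: int = 365,      # hypothetical cutoff for "outdated"
    recency_weight: float = 0.3,  # hypothetical blend between relevance and recency
) -> Optional[float]:
    """Blend relevance with recency; return None for filings past the cutoff."""
    age_days = max((today - filing.filed_on).days, 0)
    if age_days > max_age_days:
        return None
    recency = 1.0 - age_days / max_age_days
    return (1 - recency_weight) * filing.relevance + recency_weight * recency


def rank_filings(filings: list[Filing], today: date) -> list[Filing]:
    """Drop outdated filings, then sort the rest by blended score, best first."""
    scored = [(recency_weighted_score(f, today), f) for f in filings]
    kept = [(score, f) for score, f in scored if score is not None]
    return [f for score, f in sorted(kept, key=lambda pair: pair[0], reverse=True)]


# Hypothetical filings and retriever scores.
filings = [
    Filing("10-K 2021", date(2022, 2, 15), relevance=0.95),
    Filing("8-K 2023", date(2023, 9, 1), relevance=0.80),
    Filing("10-Q Q1 2024", date(2024, 5, 1), relevance=0.70),
]
ranked = rank_filings(filings, today=date(2024, 6, 30))
print([f.title for f in ranked])
```

In this made-up data, the oldest filing is dropped despite having the highest relevance, and the newest filing outranks a slightly more relevant but much older one. Where to set the cutoff and the weight is a business decision as much as a technical one.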

2. Competing with Larger Enterprises Through Agile AI Adoption

Case Study: Regional Retail Chain (MyScale)

A 150-store retail chain lacked the budget for enterprise-scale AI infrastructure but needed to match competitors’ personalized marketing. MyScale deployed a RAG recommendation system that analyzed customer purchase histories, social media trends, and inventory data. By generating real-time promotions (e.g., “Customers who bought Product A also viewed Product B”), the chain achieved a 22% lift in cross-sales, rivaling Fortune 500 retailers’ campaigns at 30% lower compute costs.

Case Study: Mid-Sized Logistics Provider (Gravity9)

Facing competition from global logistics giants, a European freight company used Gravity9’s RAG solution to optimize route planning. The system ingested weather APIs, real-time traffic data, and historical delivery logs to generate dynamic routing suggestions. This reduced fuel costs by 18% and improved on-time delivery rates to 97%, narrowing the gap with larger competitors’ AI-driven platforms.

Strategic Advantage

RAG’s modular architecture allows mid-market firms to leverage cloud-based vector databases[^1] (e.g., Pinecone, Chroma) and off-the-shelf LLMs (e.g., GPT-4, Llama 3), avoiding the need for proprietary models as detailed by AWS and Monte Carlo Data. This levels the playing field, as seen in the logistics case, where the company achieved enterprise-grade analytics without a dedicated data science team.

[^1]: I know, I’ve said “vector database” twice now without really defining it. Don’t worry if you don’t know what it is – just think of it as a thing that lets you store unstructured data (text/voice/video/pictures) in a way that number-based structured systems can use them. They get used lots to store the stuff that gets retrieved for your augmented generation. I promise that I’ll subject you to some lectures about vector databases. Plus you could always buy the books!

3. Bridging the AI Expertise Gap

Case Study: Healthcare Provider (Invisible Technologies)

A Midwest hospital network sought AI-driven patient triage but lacked ML engineers. Invisible Technologies implemented a RAG chatbot that retrieved symptoms from EHRs and generated diagnostic suggestions aligned with Mayo Clinic guidelines. Nurses achieved 90% accuracy in preliminary diagnoses, compared to 65% with manual methods, despite no prior AI training.

Case Study: Legal Firm (NineTwoThree)

A boutique law firm used NineTwoThree’s no-code RAG platform to automate contract analysis. The system extracted clauses from past case files and generated compliance summaries, reducing junior lawyers’ review time by 50%. Crucially, the firm avoided hiring NLP specialists by using pre-configured retrieval pipelines.

As an aside, and I’m not saying all law firms are like this (really, don’t sue me) – but when I was doing NLP, I found law firms to be rather resistant to any sort of efficiency improvement that they’d get from implementing a technology. It’s almost as if there was a financial reason for it. Strange.

Implementation Insights

Vendors like AWS and MyScale offer managed RAG services, handling embedding models and chunking strategies. This allows firms to focus on prompt engineering rather than infrastructure, as demonstrated by the legal team’s use of natural language queries like, “Highlight force majeure clauses in 2023 contracts.”
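If “chunking strategies” sounds mysterious, it is mostly this: cutting long documents into pieces small enough to retrieve and feed to an LLM. Below is a minimal sketch of one common approach, fixed-size chunks with overlap. The function name, the word-based sizing, and the sample clause are my assumptions; managed services may chunk by tokens, sentences, or document structure instead.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of up to chunk_size words.

    Each chunk repeats the last `overlap` words of the previous chunk, so a
    sentence that straddles a boundary still appears whole in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# Hypothetical contract clause, chunked with tiny sizes so the overlap is visible.
clause = "Either party may suspend performance during a force majeure event without penalty."
chunks = chunk_words(clause, chunk_size=5, overlap=2)
for chunk in chunks:
    print(chunk)
```

The overlap is the design choice worth noticing: without it, a clause split across two chunks could be missed by retrieval entirely.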

4. Cost-Efficient Solutions for Scalable Growth

Case Study: E-Commerce Platform (MyScale)

An online retailer reduced cloud costs by 30% using MyScale’s RAG system, which replaced batch-processing pipelines with on-demand retrieval during peak traffic. For example, product descriptions were generated dynamically using real-time inventory data, eliminating redundant storage of pre-written content.

Case Study: Banking Sector (Faktion)

A community bank used Faktion’s RAG framework to automate loan approval reports. By retrieving applicant credit histories and generating risk summaries, the bank cut processing costs by $45K/month while maintaining compliance, as documented by Enterprise Knowledge.

Economic Impact

RAG’s pay-per-query pricing (e.g., AWS Bedrock) contrasts with fixed-cost model training, making it viable for budget-conscious firms, according to AWS and Monte Carlo Data. The e-commerce case illustrates how stateless generation reduces idle compute expenses, aligning costs with actual usage.
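The pay-per-query versus fixed-cost trade-off comes down to simple arithmetic, sketched below. The dollar figures are made up purely for illustration; they are not AWS pricing or numbers from any of the case studies.

```python
def pay_per_query_cost(queries_per_month: int, cost_per_query: float) -> float:
    """Monthly bill when you pay only for the queries you actually run."""
    return queries_per_month * cost_per_query


def break_even_queries(fixed_monthly_cost: float, cost_per_query: float) -> float:
    """Monthly query volume at which pay-per-query spending equals the fixed cost."""
    if cost_per_query <= 0:
        raise ValueError("cost_per_query must be positive")
    return fixed_monthly_cost / cost_per_query


# Hypothetical numbers, chosen only to make the arithmetic easy to follow.
FIXED_MONTHLY_COST = 5_000.00  # e.g., amortized training plus always-on hosting
COST_PER_QUERY = 0.01          # e.g., one retrieval plus one generation call

threshold = break_even_queries(FIXED_MONTHLY_COST, COST_PER_QUERY)
quiet_month = pay_per_query_cost(20_000, COST_PER_QUERY)
print(f"Break-even at about {threshold:,.0f} queries per month")
print(f"A 20,000-query month costs about ${quiet_month:,.2f} on pay-per-query")
```

Below the break-even volume, paying per query is the cheaper option; above it, the fixed-cost route starts to win. Most mid-market firms starting out will be well below it, which is the point of the paragraph above.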

5. Expanding RAG’s Value: Emerging Applications

Enhancing Customer Experience (Imbrace)

A telecom company integrated RAG with WhatsApp and Slack, enabling chatbots to retrieve real-time outage maps and generate personalized repair timelines as detailed by Imbrace. This reduced call center volume by 40% and improved CSAT scores by 28 points.

Regulatory Compliance (Enterprise Knowledge)

A pharmaceutical company automated FDA submission checks using RAG to cross-reference trial data with regulatory databases. The system flagged 95% of compliance gaps pre-submission, versus 70% manually, according to Enterprise Knowledge.

Knowledge Retention (Xinthe)

A tech startup used Xinthe’s RAG tools to convert fragmented Slack conversations into a searchable knowledge base, reducing duplicate queries by 60%.

Wrapping This Up and Transitioning to the Next Article

So, in this article, we introduced the concept of Retrieval Augmented Generation, the unfortunately acronym’ed “RAG.” RAG integrates knowledge that is topical (in some way) to the query and the context of the query. Topical could mean knowledge that is current, like news sources, or knowledge that is domain-specific, like company support documents or competitor battlecards. The relevant topical knowledge is retrieved and then sent along with the original prompt to the large language model that is at the heart of the generative AI system. Using a combination of the original query and the topical information, the generative AI system produces a much more powerful, relevant response than it would have otherwise. One might say that the generation was… augmented… by the retrieval.

See you in article 2: The Quantifiable Benefit of RAG.